Access Live Azure Data Lake Storage Data in AWS Lambda (with IntelliJ IDEA)

Connect to live Azure Data Lake Storage data in AWS Lambda using IntelliJ IDEA and the CData JDBC Driver to build the function.

AWS Lambda is a compute service that lets you build applications that respond quickly to new information and events. AWS Lambda functions can work with live Azure Data Lake Storage data when paired with the CData JDBC Driver for Azure Data Lake Storage. This article describes how to connect to and query Azure Data Lake Storage data from an AWS Lambda function built with Maven in IntelliJ.

With built-in optimized data processing, the CData JDBC Driver is designed for high-performance access to live Azure Data Lake Storage data. When you issue complex SQL queries to Azure Data Lake Storage, the driver pushes supported SQL operations, like filters and aggregations, directly to Azure Data Lake Storage and uses its embedded SQL engine to process unsupported operations (often SQL functions and JOIN operations) client-side. In addition, its built-in dynamic metadata querying lets you work with and analyze Azure Data Lake Storage data using native data types.

Gather Connection Properties and Build a Connection String

Download the CData JDBC Driver for Azure Data Lake Storage installer, unzip the package, and run the JAR file to install the driver. Then gather the required connection properties.

Authenticating to a Gen 1 DataLakeStore Account

Gen 1 uses OAuth 2.0 in Azure AD for authentication, which requires an Active Directory web application.

To authenticate against a Gen 1 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen1.

- Account: Set this to the name of the account.

- OAuthClientId: Set this to the application Id of the app you created.

- OAuthClientSecret: Set this to the key generated for the app you created.

- TenantId: Set this to the tenant Id. See the TenantId property in the driver documentation for details on how to obtain it.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.

Authenticating to a Gen 2 DataLakeStore Account

To authenticate against a Gen 2 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen2.

- Account: Set this to the name of the account.

- FileSystem: Set this to the file system which will be used for this account.

- AccessKey: Set this to the access key used to authenticate calls to the API. See the AccessKey property in the driver documentation for details on how to obtain it.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.
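The properties above end up as Name=Value pairs in a JDBC URL. As a minimal sketch (not part of the driver), the helper below assembles such a URL from a map of properties. The class name AdlsConnectionString and the build method are hypothetical, the jdbc:cdata:adls: prefix is taken from the connection string used later in this article, and the sketch assumes property values contain no semicolons.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper for illustration; it is not part of the CData driver.
public class AdlsConnectionString {
    // URL prefix as it appears in the JDBC URL used later in this article.
    private static final String PREFIX = "jdbc:cdata:adls:";

    /** Joins properties as Name=Value; pairs after the prefix, skipping unset values. */
    public static String build(Map<String, String> properties) {
        StringBuilder url = new StringBuilder(PREFIX);
        for (Map.Entry<String, String> entry : properties.entrySet()) {
            String value = entry.getValue();
            if (value == null || value.isEmpty()) {
                continue; // optional property left unset, e.g. Directory
            }
            // Assumption: values contain no ';' characters, so no escaping is done.
            url.append(entry.getKey()).append('=').append(value).append(';');
        }
        return url.toString();
    }

    public static void main(String[] args) {
        // Placeholder values for a Gen 2 account; LinkedHashMap keeps insertion order.
        Map<String, String> gen2 = new LinkedHashMap<>();
        gen2.put("Schema", "ADLSGen2");
        gen2.put("Account", "myAccount");
        gen2.put("FileSystem", "myFileSystem");
        gen2.put("AccessKey", "myAccessKey");
        gen2.put("Directory", ""); // unset, so the root directory is used
        System.out.println(build(gen2));
    }
}
```

For a Gen 1 account, the same helper would take Schema=ADLSGen1 along with the Account, OAuthClientId, OAuthClientSecret, and TenantId properties.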

NOTE: To use the JDBC driver in an AWS Lambda function, you will need a license (full or trial) and a Runtime Key (RTK). For more information on obtaining this license (or a trial), contact our sales team.

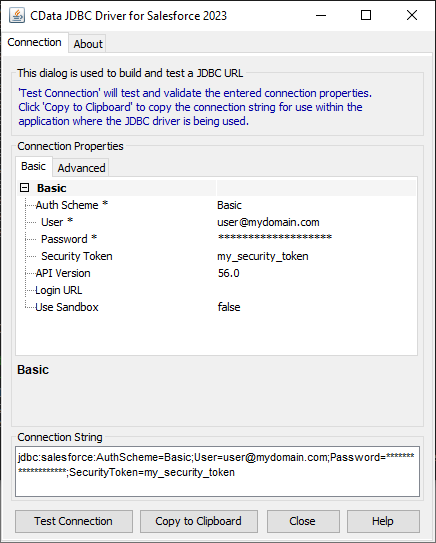

Built-in Connection String Designer

For assistance constructing the JDBC URL, use the connection string designer built into the Azure Data Lake Storage JDBC Driver. Double-click the JAR file or execute the jar file from the command line.

java -jar cdata.jdbc.adls.jar

Fill in the connection properties (including the RTK) and copy the connection string to the clipboard.

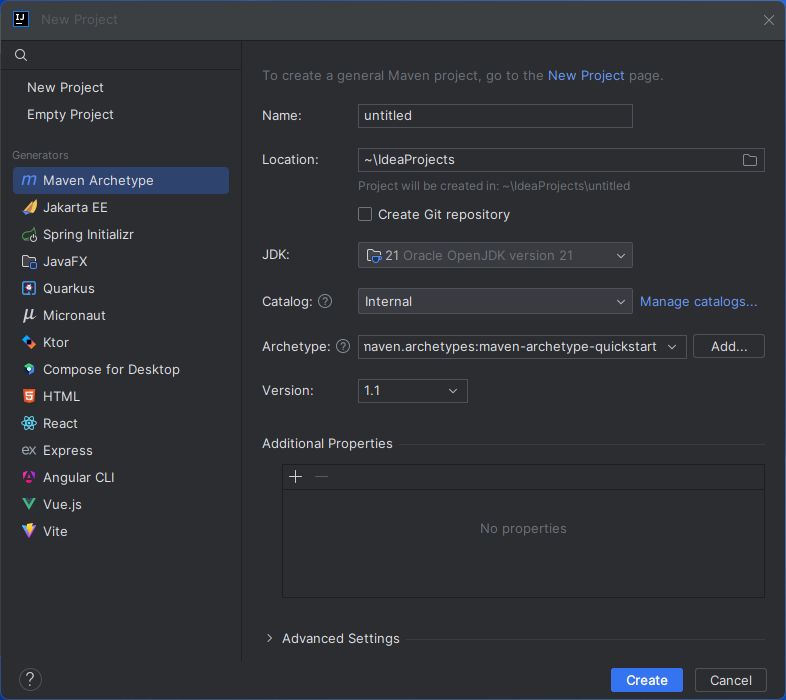

Create a Project in IntelliJ

- In IntelliJ IDEA, click "New Project."

- Select "Maven Archetype" from the Generators list.

- Name the project and select the "org.apache.maven.archetypes:maven-archetype-quickstart" archetype.

- Click "Create."

Install the CData JDBC Driver for Azure Data Lake Storage JAR File

Use the following Maven command from the project's root folder to install the driver JAR file into your local Maven repository so the project can reference it.

mvn install:install-file -Dfile="PATH/TO/CData JDBC Driver for Azure Data Lake Storage 20XX/lib/cdata.jdbc.adls.jar" -DgroupId="org.cdata.connectors" -DartifactId="cdata-adls-connector" -Dversion="23" -Dpackaging=jar

Add Dependencies

Within the Maven project's pom.xml file, add AWS and the CData JDBC Driver for Azure Data Lake Storage as dependencies (within the <dependencies> element) using the following XML.

- AWS

<dependency>
  <groupId>com.amazonaws</groupId>
  <artifactId>aws-lambda-java-core</artifactId>
  <version>1.2.2</version> <!--Replace with the actual version-->
</dependency>

- CData JDBC Driver for Azure Data Lake Storage

<dependency>
  <groupId>org.cdata.connectors</groupId>
  <artifactId>cdata-adls-connector</artifactId>
  <version>23</version> <!--Replace with the actual version-->
</dependency>

Create an AWS Lambda Function

For this sample project, we create two source files: CDataLambda.java and CDataLambdaTest.java.

Lambda Function Definition

- Update CDataLambda to implement the RequestHandler interface from the AWS Lambda SDK. You will need to add the handleRequest method, which performs the following tasks when the Lambda function is triggered:

  - Constructs a SQL query using the input.

  - Sets up AWS credentials and S3 configuration to store OAuth credentials.

  - Registers the CData JDBC driver for Azure Data Lake Storage.

  - Establishes a connection to Azure Data Lake Storage using JDBC.

  - Executes the SQL query on Azure Data Lake Storage.

  - Prints the results to the console.

  - Returns an output message.

- Add the following import statements to the Java class:

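import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.ResultSetMetaData;
import java.sql.SQLException;
import java.sql.Statement;

// AWS SDK for Java (v1) classes used by the S3 configuration in handleRequest;
// these require the aws-java-sdk-s3 dependency in addition to aws-lambda-java-core.
import com.amazonaws.auth.AWSStaticCredentialsProvider;
import com.amazonaws.auth.BasicAWSCredentials;
import com.amazonaws.services.s3.AmazonS3;
import com.amazonaws.services.s3.AmazonS3ClientBuilder;

- Replace the body of the handleRequest method with the code below. Be sure to fill in the connection string in the DriverManager.getConnection method call.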

// Build the query from the function input (expected to be a table name)
String query = "SELECT * FROM " + input;

// Set your AWS credentials
String awsAccessKey = "YOUR_AWS_ACCESS_KEY";
String awsSecretKey = "YOUR_AWS_SECRET_KEY";
String awsRegion = "YOUR_AWS_REGION";

// AWS S3 configuration
AmazonS3 s3Client = AmazonS3ClientBuilder.standard()
        .withRegion(awsRegion)
        .withCredentials(new AWSStaticCredentialsProvider(
                new BasicAWSCredentials(awsAccessKey, awsSecretKey)))
        .build();

// Location of the OAuth settings file in S3
String bucketName = "MY_AWS_BUCKET";
String oauthSettings = "s3://" + bucketName + "/OAuthSettings.txt";
String oauthConnection = "InitiateOAuth=REFRESH;"
        + "OAuthSettingsLocation=" + oauthSettings + ";";

// Register the CData JDBC driver
try {
    Class.forName("cdata.jdbc.adls.ADLSDriver");
    cdata.jdbc.adls.ADLSDriver driver = new cdata.jdbc.adls.ADLSDriver();
    DriverManager.registerDriver(driver);
} catch (SQLException ex) {
    context.getLogger().log("Error registering driver: " + ex.getMessage());
} catch (ClassNotFoundException e) {
    throw new RuntimeException(e);
}

// Connect to Azure Data Lake Storage
Connection connection = null;
try {
    connection = DriverManager.getConnection("jdbc:cdata:adls:RTK=52465...;Schema=ADLSGen2;Account=myAccount;FileSystem=myFileSystem;AccessKey=myAccessKey;" + oauthConnection);
} catch (SQLException ex) {
    context.getLogger().log("Error getting connection: " + ex.getMessage());
} catch (Exception ex) {
    context.getLogger().log("Error: " + ex.getMessage());
}

// Stop here if the connection failed, instead of hitting a NullPointerException below
if (connection == null) {
    return "query: " + query + " failed: no connection";
}
context.getLogger().log("Connected Successfully!\n");

// Execute the query; try-with-resources closes the connection, statement, and result set
try (Connection conn = connection;
     Statement stmt = conn.createStatement();
     ResultSet resultSet = stmt.executeQuery(query)) {
    ResultSetMetaData metaData = resultSet.getMetaData();
    int numCols = metaData.getColumnCount();

    // Print each row, one fixed-width column per field
    while (resultSet.next()) {
        for (int i = 1; i <= numCols; i++) {
            Object value = resultSet.getObject(i);
            System.out.printf("%-25s",
                    (value != null) ? value.toString().replaceAll("\n", "") : null);
        }
        System.out.print("\n");
    }
} catch (SQLException ex) {
    System.out.println("SQL Exception: " + ex.getMessage());
} catch (Exception ex) {
    System.out.println("General exception: " + ex.getMessage());
}

return "query: " + query + " complete";
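Because the query is built by concatenating the function input, it may be worth checking the input before using it. The sketch below shows one way to do that with the JDK only. The class name TableQuery and its method are hypothetical, and it assumes table names are simple identifiers made of letters, digits, and underscores, which may be stricter than what the driver actually accepts.

```java
import java.util.regex.Pattern;

// Hypothetical helper for illustration; not part of the AWS SDK or the CData driver.
public class TableQuery {
    // Assumption: a table name is a simple identifier (letters, digits, underscores).
    private static final Pattern SAFE_IDENTIFIER =
            Pattern.compile("[A-Za-z_][A-Za-z0-9_]*");

    /** Returns a SELECT * query for the table, or throws if the name looks unsafe. */
    public static String selectAllFrom(String tableName) {
        if (tableName == null
                || !SAFE_IDENTIFIER.matcher(tableName.trim()).matches()) {
            throw new IllegalArgumentException("Unsupported table name: " + tableName);
        }
        return "SELECT * FROM " + tableName.trim();
    }

    public static void main(String[] args) {
        // MyTable is a placeholder table name.
        System.out.println(selectAllFrom("MyTable"));
        try {
            selectAllFrom("MyTable; DROP TABLE Other");
        } catch (IllegalArgumentException ex) {
            System.out.println("Rejected: " + ex.getMessage());
        }
    }
}
```

In handleRequest, the first line could then become String query = TableQuery.selectAllFrom(input); so that unexpected input is rejected before any connection is opened.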

Deploy and Run the Lambda Function

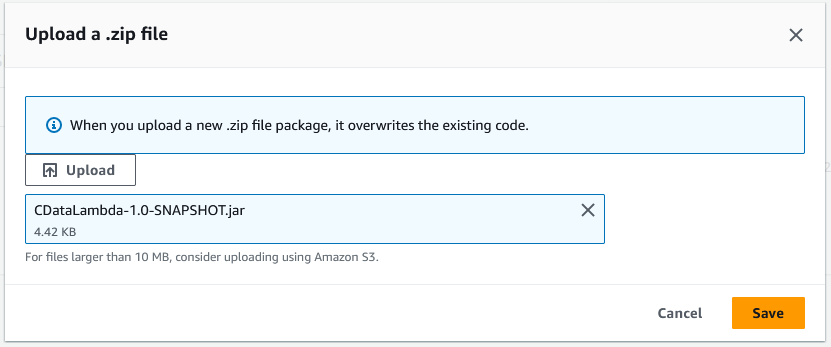

Once you build the function in IntelliJ, you are ready to deploy the entire Maven project as a single JAR file.

- In IntelliJ, use the mvn install command to build the SNAPSHOT JAR file.

- Create a new function in AWS Lambda (or open an existing one).

- Name the function, select an IAM role, and set the timeout value to a high enough value to ensure the function completes (depending on the result size of your query).

- Click "Upload from" -> ".zip file" and select your SNAPSHOT JAR file.

![Uploading the SNAPSHOT JAR file.]()

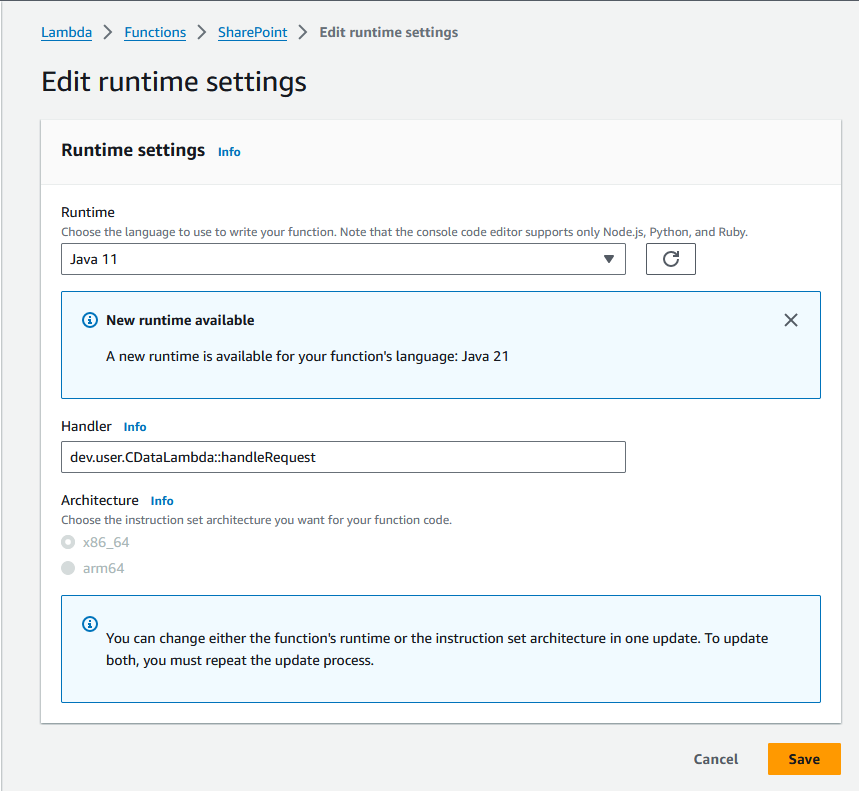

- In the "Runtime settings" section, click "Edit" and set Handler to your "handleRequest" method (e.g. package.class::handleRequest).

![Configuring the runtime handler.]()

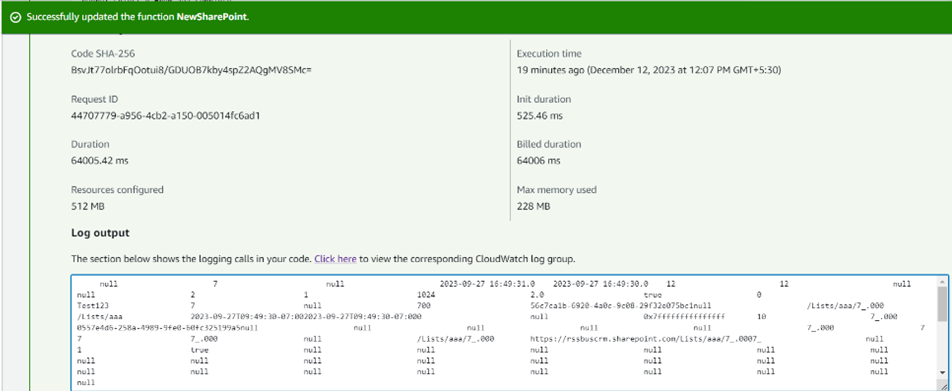

- You can now test the function. Set the "Event JSON" field to a table name (as a quoted JSON string) and click "Test."

![Viewing the results.]()
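The Handler value from the "Runtime settings" step follows the package.class::method pattern. As a small sketch of how that string is composed, the hypothetical helper below builds it from its parts. The package name com.example is an assumption for illustration; use the package your CDataLambda class actually lives in.

```java
// Hypothetical helper for illustration; it only shows how the handler string is composed.
public class HandlerName {
    /** Builds a handler string in the package.class::method form. */
    public static String of(String packageName, String className, String methodName) {
        // A class in the default package has no package prefix.
        String qualified = (packageName == null || packageName.isEmpty())
                ? className
                : packageName + "." + className;
        return qualified + "::" + methodName;
    }

    public static void main(String[] args) {
        // com.example is an assumed package name.
        System.out.println(of("com.example", "CDataLambda", "handleRequest"));
    }
}
```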

Free Trial & More Information

Download a free, 30-day trial of the CData JDBC Driver for Azure Data Lake Storage and start working with your live Azure Data Lake Storage data in AWS Lambda. Reach out to our Support Team if you have any questions.