Use the API Server and Azure Data Lake Storage ADO.NET Provider in Microsoft Power BI

You can use the API Server to feed Azure Data Lake Storage data to Power BI dashboards. Simply drag and drop Azure Data Lake Storage data into data visuals on the Power BI canvas.

The CData API Server enables your organization to create Power BI reports based on the current Azure Data Lake Storage data (plus data from 200+ other ADO.NET Providers). The API Server is a lightweight Web application that runs on your server and, when paired with the ADO.NET Provider for Azure Data Lake Storage, provides secure OData services of Azure Data Lake Storage data to authorized users. The OData standard enables real-time access to the live data, and support for OData is integrated into Power BI. This article details how to create data visualizations based on Azure Data Lake Storage OData services in Power BI.

Set Up the API Server

Follow the steps below to begin producing secure Azure Data Lake Storage OData services:

Deploy

The API Server runs on your own server. On Windows, you can deploy using the stand-alone server or IIS. On a Java servlet container, drop in the API Server WAR file. See the help documentation for more information and how-tos.

The API Server is also easy to deploy on Microsoft Azure, Amazon EC2, and Heroku.

Connect to Azure Data Lake Storage

After you deploy the API Server and the ADO.NET Provider for Azure Data Lake Storage, click Settings -> Connection in the API Server administration console and add a new connection. Provide the authentication values and other connection properties needed to connect to Azure Data Lake Storage.

Authenticating to a Gen 1 DataLakeStore Account

Gen 1 uses OAuth 2.0 in Azure AD for authentication.

For this, an Active Directory web application is required. You can create one as follows:

To authenticate against a Gen 1 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen1.

- Account: Set this to the name of the account.

- OAuthClientId: Set this to the application Id of the app you created.

- OAuthClientSecret: Set this to the key generated for the app you created.

- TenantId: Set this to the tenant Id. See the TenantId property in the help documentation for more information on how to acquire this.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.
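As a rough illustration, the Gen 1 properties above can be assembled into a semicolon-delimited connection string, the usual form for ADO.NET providers. The helper name `build_connection_string` and every value below are placeholders invented for this sketch; check the exact string your provider accepts against its help documentation.

```python
def build_connection_string(props):
    """Join property names and values as 'Name=Value' pairs separated by ';'."""
    parts = []
    for name, value in props.items():
        if value is None or value == "":
            continue  # skip optional properties that were left unset
        parts.append(f"{name}={value}")
    return ";".join(parts) + ";"


# Placeholder values only; substitute the details of your own account and app.
gen1_props = {
    "Schema": "ADLSGen1",
    "Account": "myAccount",
    "OAuthClientId": "myClientId",
    "OAuthClientSecret": "myClientSecret",
    "TenantId": "myTenantId",
    "Directory": "",  # left unset here, so the root directory is used
}

gen1_connection_string = build_connection_string(gen1_props)
print(gen1_connection_string)
```

Keeping the properties in a dictionary makes it easy to load secrets such as OAuthClientSecret from a protected store rather than hard-coding them.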

Authenticating to a Gen 2 DataLakeStore Account

To authenticate against a Gen 2 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen2.

- Account: Set this to the name of the account.

- FileSystem: Set this to the file system which will be used for this account.

- AccessKey: Set this to the access key which will be used to authenticate the calls to the API. See the AccessKey property in the help documentation for more information on how to acquire this.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.
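Before saving a connection, it can help to confirm that nothing required is missing. The sketch below checks a set of properties against the lists given in this section for each Schema value. It treats Directory as optional, on the assumption that the root directory is used when it is unset; the function name and sample values are hypothetical.

```python
# Required property names per Schema, taken from the lists in this section.
REQUIRED_PROPERTIES = {
    "ADLSGen1": ["Account", "OAuthClientId", "OAuthClientSecret", "TenantId"],
    "ADLSGen2": ["Account", "FileSystem", "AccessKey"],
}


def missing_properties(props):
    """Return the required property names that are absent or empty."""
    schema = props.get("Schema")
    if schema not in REQUIRED_PROPERTIES:
        raise ValueError(f"Unknown Schema: {schema!r}")
    return [name for name in REQUIRED_PROPERTIES[schema] if not props.get(name)]


# Placeholder Gen 2 settings; AccessKey is left out on purpose to show the check.
gen2_props = {
    "Schema": "ADLSGen2",
    "Account": "myAccount",
    "FileSystem": "myFileSystem",
}

print(missing_properties(gen2_props))  # → ['AccessKey']
```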

When you configure the connection, you may also want to set the Max Rows connection property. This will limit the number of rows returned, which is especially helpful for improving performance when designing reports and visualizations.

You can then choose the Azure Data Lake Storage entities you want to allow the API Server access to by clicking Settings -> Resources.

Authorize API Server Users

After determining the OData services you want to produce, authorize users by clicking Settings -> Users. The API Server uses authtoken-based authentication and supports the major authentication schemes. Access can also be restricted by IP address; by default, only connections from the local machine are allowed. You can also use SSL to authenticate and encrypt connections.
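When a client uses HTTP Basic authentication, the username and authtoken travel in an Authorization header. The following minimal sketch shows how that header value is formed according to the HTTP Basic scheme (RFC 7617); the user name and authtoken are made up for illustration. Because Basic credentials are only base64-encoded, not encrypted, this is one reason to enable SSL.

```python
import base64


def basic_auth_header(username, authtoken):
    """Build the value of an HTTP Basic Authorization header."""
    credentials = f"{username}:{authtoken}".encode("utf-8")
    return "Basic " + base64.b64encode(credentials).decode("ascii")


# Hypothetical user and authtoken; use the values shown under Settings -> Users.
header = basic_auth_header("powerbi_user", "my-authtoken")
print({"Authorization": header})
```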

Connect to Azure Data Lake Storage Data from Power BI

Follow the steps below to connect to Azure Data Lake Storage data from Power BI.

- Open Power BI Desktop and click Get Data -> OData Feed. To start Power BI Desktop from PowerBI.com, click the download button and then click Power BI Desktop.

-

Enter the URL to the OData endpoint of the API Server. For example:

http://MyServer:8032/api.rsc -

Enter authentication for the API Server. To configure Basic authentication, select Basic and enter the username and authtoken for a user of the OData API of the API Server.

The API Server also supports Windows authentication using ASP.NET. See the help documentation for more information.

-

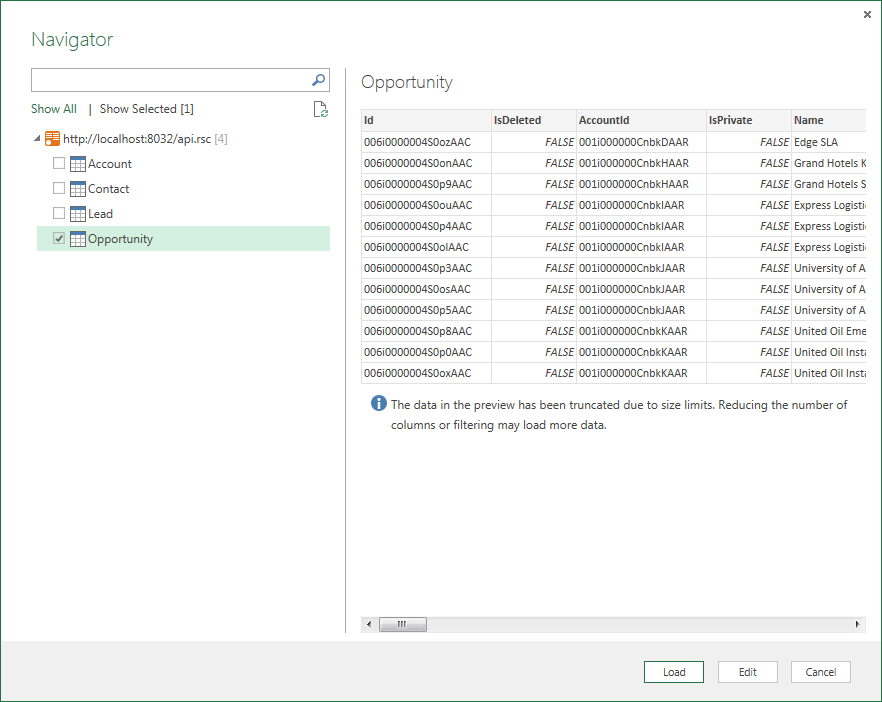

In the Navigator, select tables to load. For example, Resources.
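Outside Power BI, the same feed can be inspected with plain OData URLs, which is a quick way to check what a table returns before loading it. The sketch below builds such URLs with the standard OData system query options `$select`, `$top`, and `$filter`. It assumes the Resources table is exposed as an entity set directly under the example endpoint; the helper name is hypothetical.

```python
from urllib.parse import quote, urlencode

BASE_URL = "http://MyServer:8032/api.rsc"  # example endpoint from this article


def odata_url(entity_set, select=None, top=None, filter_expr=None):
    """Build an OData URL using standard system query options."""
    options = {}
    if select:
        options["$select"] = ",".join(select)
    if top is not None:
        options["$top"] = str(top)
    if filter_expr:
        options["$filter"] = filter_expr
    url = f"{BASE_URL}/{quote(entity_set)}"
    if options:
        # Keep '$' and ',' readable; other reserved characters are percent-encoded.
        url += "?" + urlencode(options, safe="$,", quote_via=quote)
    return url


print(odata_url("Resources", select=["FullPath", "Permission"], top=10))
# → http://MyServer:8032/api.rsc/Resources?$select=FullPath,Permission&$top=10
```

Limiting rows with `$top` while exploring serves the same purpose as the Max Rows connection property: smaller responses while you design a report.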

Create Data Visualizations

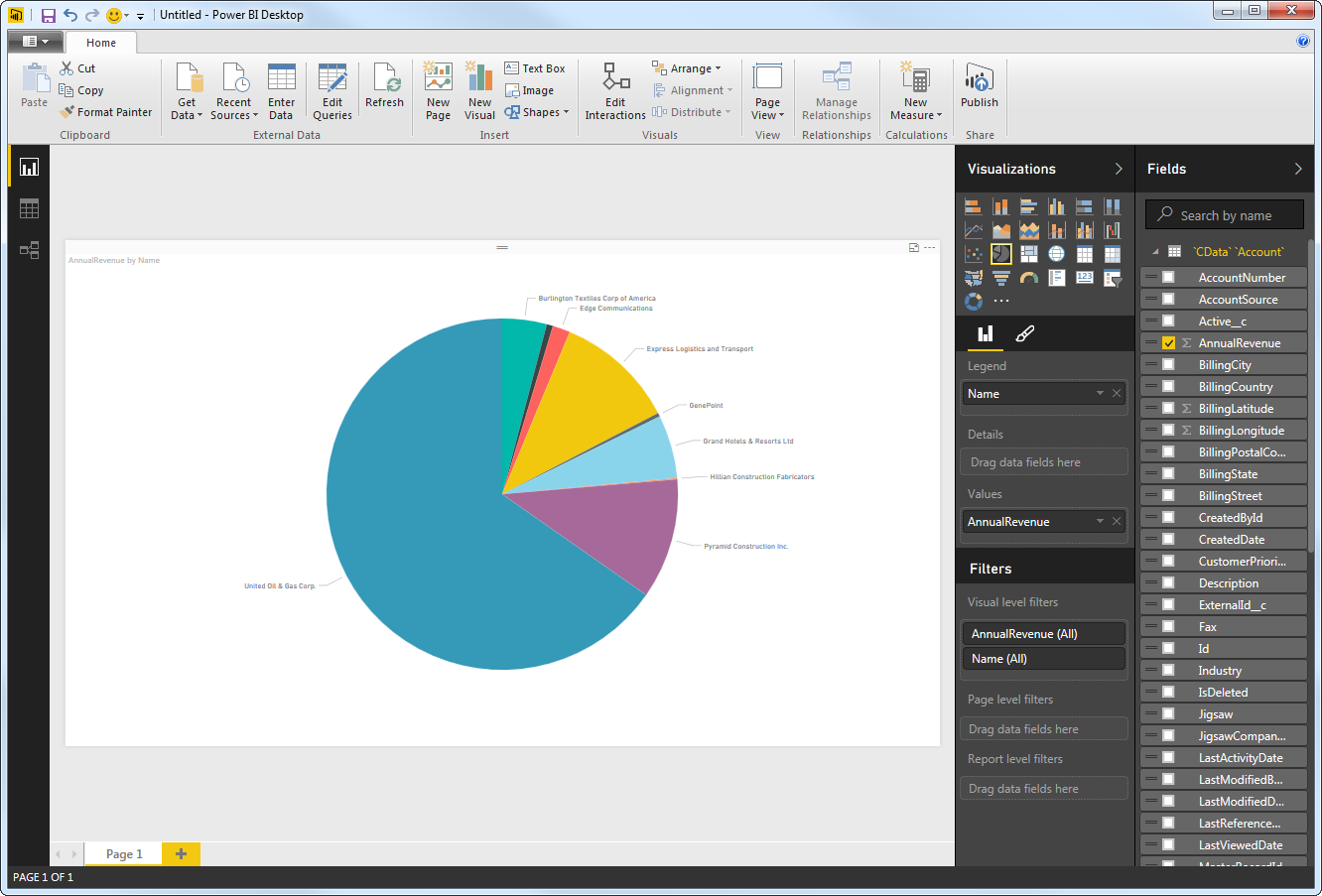

After pulling the data into Power BI, you can create data visualizations in the Report view. Follow the steps below to create a pie chart:

- Select the pie chart icon in the Visualizations pane.

- Select a dimension in the Fields pane: for example, FullPath.

- Select a measure in the Fields pane: for example, Permission.

You can change sort options by clicking the ellipsis (...) button for the chart. Options to select the sort column and change the sort order are displayed.

You can use both highlighting and filtering to focus on data. Filtering removes unfocused data from visualizations; highlighting dims unfocused data.

You can highlight fields by clicking them.

You can apply filters at the page level, at the report level, or to a single visualization by dragging fields onto the Filters pane. To filter on the field's value, select one of the values that are displayed in the Filters pane.

Click Refresh to synchronize your report with any changes to the data.

Upload Azure Data Lake Storage Data Reports to Power BI

You can now upload and share reports with other Power BI users in your organization. To upload a dashboard or report, log in to PowerBI.com, click Get Data in the main menu, and then click Files. Navigate to a Power BI Desktop file or Excel workbook. You can then select the report in the Reports section.

Refresh on Schedule and on Demand

You can configure Power BI to automatically refresh your uploaded report. You can also refresh the dataset on demand in Power BI. Follow the steps below to schedule refreshes through the API Server:

- Log into Power BI.

- In the Dataset section, right-click the Azure Data Lake Storage Dataset and click Schedule Refresh.

- If you are hosting the API Server on a public-facing server like Azure, you can connect directly. Otherwise, if you are connecting to a feed on your machine, you will need to expand the Gateway Connection node and select a gateway, for example, the Microsoft Power BI Personal Gateway.

- In the settings for your dataset, expand the Data Source Credentials node and click Edit Credentials.

- Expand the Schedule Refresh section, select Yes in the Keep Your Data Up to Date menu, and specify the refresh interval.
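An on-demand refresh can also be requested programmatically. The sketch below prepares, but does not send, a call to the Power BI REST API's dataset refresh endpoint. It is not part of the API Server; the dataset Id and access token are placeholders, and obtaining an Azure AD access token with suitable permissions is assumed to happen elsewhere.

```python
import json
from urllib.request import Request

POWER_BI_API = "https://api.powerbi.com/v1.0/myorg"


def build_refresh_request(dataset_id, access_token):
    """Prepare (but do not send) a POST asking Power BI to refresh a dataset."""
    body = json.dumps({"notifyOption": "NoNotification"}).encode("utf-8")
    return Request(
        url=f"{POWER_BI_API}/datasets/{dataset_id}/refreshes",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {access_token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder dataset Id and token for illustration only.
request = build_refresh_request("00000000-0000-0000-0000-000000000000", "<access-token>")
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```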

You can now share real-time Azure Data Lake Storage reports through Power BI.