Create SSAS Tabular Models from Azure Data Lake Storage Data

How to build a SQL Server Analysis Services Tabular Model from Azure Data Lake Storage data using CData drivers.

SQL Server Analysis Services (SSAS) is an analytical data engine used in decision support and business analytics. It provides enterprise-grade semantic data models for business reports and client applications, such as Power BI, Excel, Reporting Services reports, and other data visualization tools. When paired with the CData ODBC Driver for Azure Data Lake Storage, you can create a tabular model from Azure Data Lake Storage data for deeper and faster data analysis.

Create a Connection to Azure Data Lake Storage Data

If you have not already done so, first specify the connection properties in an ODBC DSN (data source name); this is the last step of the driver installation. You can use the Microsoft ODBC Data Source Administrator to create and configure ODBC DSNs.

Authenticating to a Gen 1 DataLakeStore Account

Gen 1 uses OAuth 2.0 in Azure AD for authentication.

For this, you need an Active Directory web application.

To authenticate against a Gen 1 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen1.

- Account: Set this to the name of the account.

- OAuthClientId: Set this to the application Id of the app you created.

- OAuthClientSecret: Set this to the key generated for the app you created.

- TenantId: Set this to the tenant ID. See the TenantId property in the driver documentation for details on how to find it.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.

Authenticating to a Gen 2 DataLakeStore Account

To authenticate against a Gen 2 DataLakeStore account, the following properties are required:

- Schema: Set this to ADLSGen2.

- Account: Set this to the name of the account.

- FileSystem: Set this to the file system which will be used for this account.

- AccessKey: Set this to the access key used to authenticate calls to the API. See the AccessKey property in the driver documentation for details on how to find it.

- Directory: Set this to the path which will be used to store the replicated file. If not specified, the root directory will be used.
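Because Gen 1 and Gen 2 need different properties, a quick check before saving the DSN can catch a missing value. The sketch below encodes the required properties from the two lists above and reports which ones are absent for the chosen Schema. REQUIRED_PROPERTIES and missing_properties are hypothetical names, and treating an empty string as missing is an assumption of this sketch, not documented driver behavior.

```python
# Required properties for each Schema value, taken from the Gen 1 and Gen 2
# lists above. Directory is optional for both, so it is not listed.
REQUIRED_PROPERTIES = {
    "ADLSGen1": {"Account", "OAuthClientId", "OAuthClientSecret", "TenantId"},
    "ADLSGen2": {"Account", "FileSystem", "AccessKey"},
}


def missing_properties(props: dict) -> list:
    """Return, sorted, the required properties that are absent or empty
    for the schema named in props["Schema"]."""
    schema = props.get("Schema")
    if schema not in REQUIRED_PROPERTIES:
        raise ValueError(f"Schema must be one of {sorted(REQUIRED_PROPERTIES)}, got {schema!r}")
    return sorted(name for name in REQUIRED_PROPERTIES[schema] if not props.get(name))


# A Gen 2 configuration that is missing its access key (placeholder values).
print(missing_properties({"Schema": "ADLSGen2", "Account": "myaccount", "FileSystem": "myfilesystem"}))
```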

Creating a Data Source for Azure Data Lake Storage

Start by creating a new Analysis Services Tabular Project in Visual Studio. Next, create a Data Source for Azure Data Lake Storage in the project.

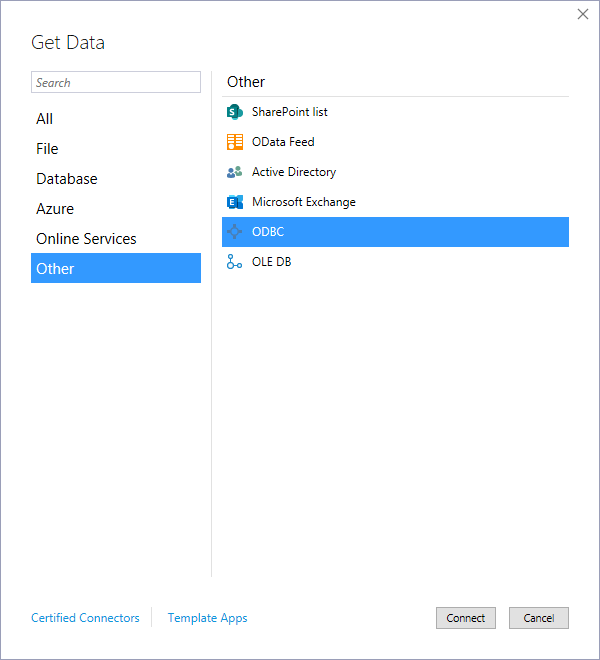

- In the Tabular Model Explorer, right-click Data Sources and select "New Data Source"

- Select "ODBC" from the Other tab and click "Connect"

![Selecting ODBC as the connector]()

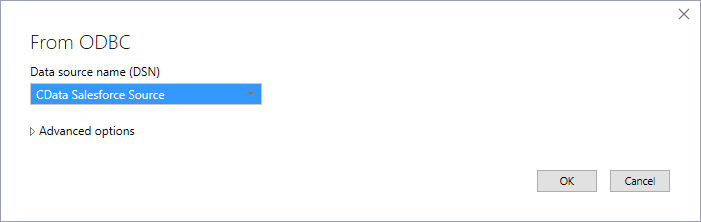

- Select the DSN you previously configured

![Selecting the DSN (Salesforce is shown)]()

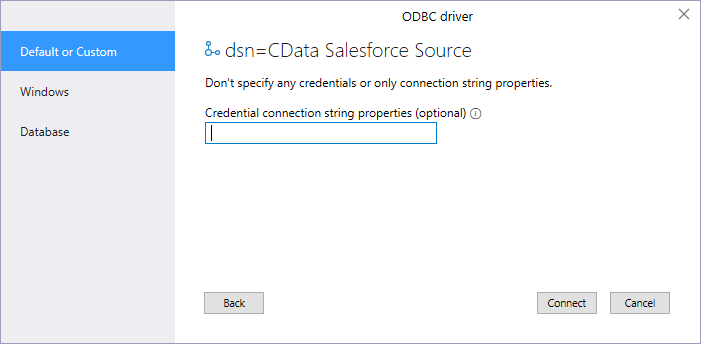

- Choose "Default or Custom" as the authentication option and click "Connect"

![Connecting to the DSN (Salesforce is shown)]()

Add Tables & Relationships

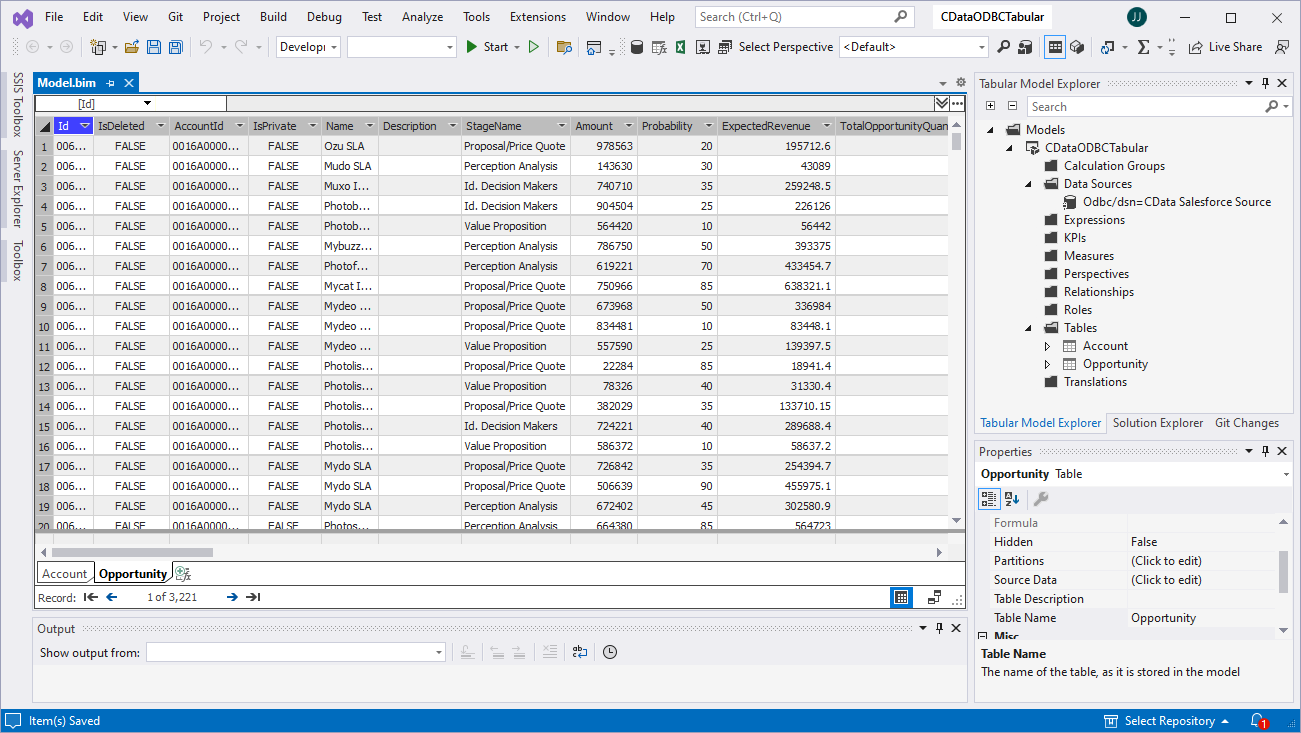

After creating the data source, you are ready to import tables and define the relationships between them.

- Right-click the new data source, click "Import New Tables" and select the tables to import

![Importing the tables (Salesforce is shown)]()

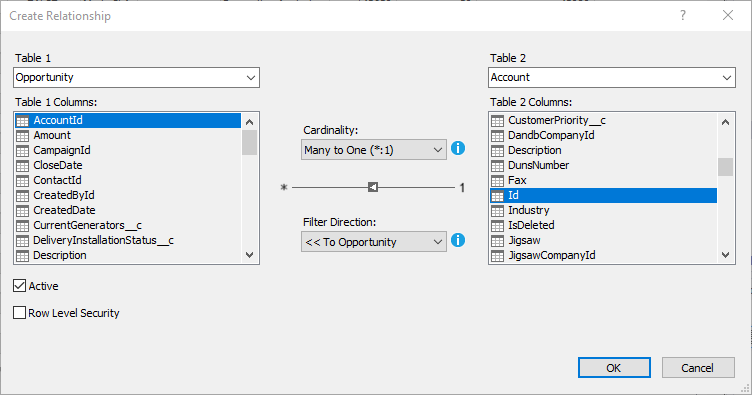

- After importing the tables, right-click "Relationships" and click "Create Relationships"

- Select the tables, then choose the foreign key columns, the cardinality, and the filter direction

![Configuring relationships between tables (Salesforce is shown)]()
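Choosing the cardinality comes down to whether the key values on each side of the relationship are unique. As a rough aid, the hypothetical helper below inspects two key columns (for example, a foreign key column and the ID column it references) and suggests a cardinality. It ignores null keys and is only a heuristic for picking the setting, not how Analysis Services itself validates relationships; the sample key values are made up.

```python
def infer_cardinality(from_keys: list, to_keys: list) -> str:
    """Suggest a cardinality for a relationship from from_keys (the foreign
    key column) to to_keys (the column it references), based on whether
    the non-null values on each side are unique."""

    def is_unique(values: list) -> bool:
        non_null = [v for v in values if v is not None]
        return len(non_null) == len(set(non_null))

    from_unique = is_unique(from_keys)
    to_unique = is_unique(to_keys)
    if from_unique and to_unique:
        return "one-to-one"
    if to_unique:
        return "many-to-one"  # many rows point at each unique key
    if from_unique:
        return "one-to-many"
    return "many-to-many"


# Hypothetical data: several child rows share an AccountId; account IDs are unique.
child_account_ids = [1, 1, 2, 3]
account_ids = [1, 2, 3]
print(infer_cardinality(child_account_ids, account_ids))
```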

Create Measures

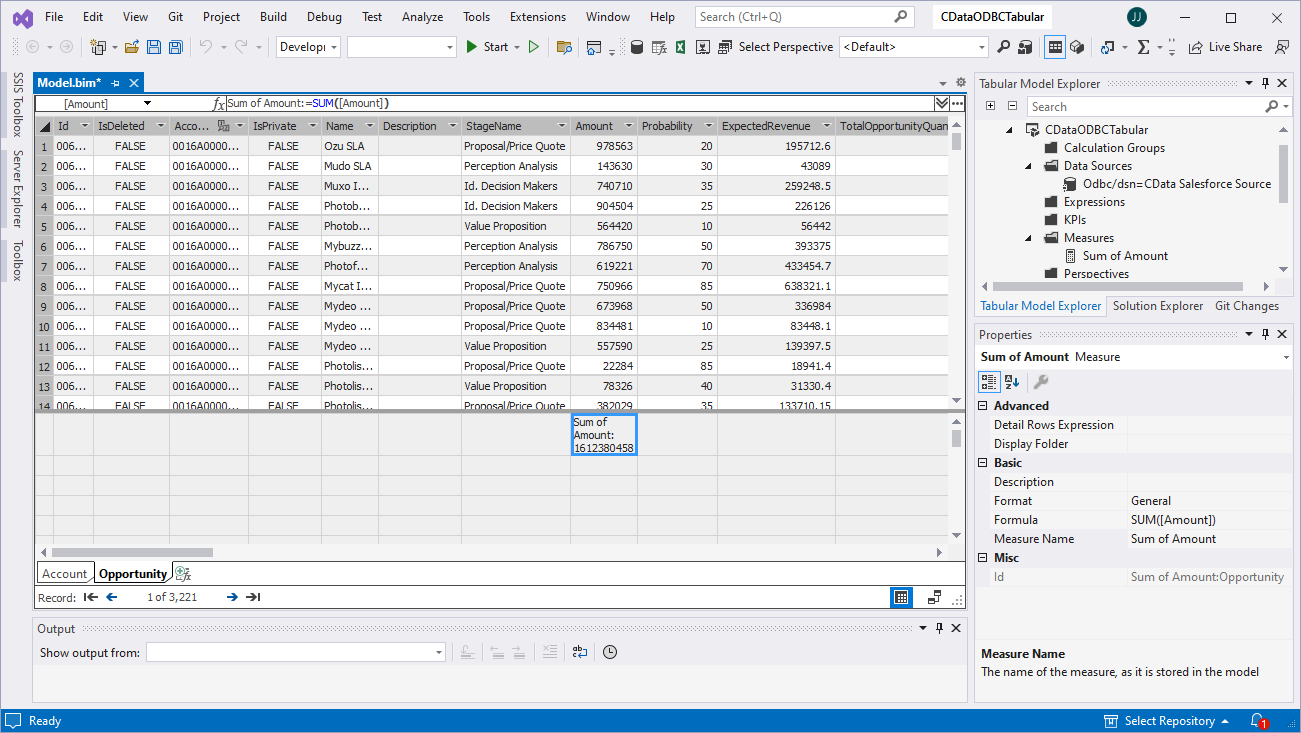

After importing the tables and defining the relationships, you are ready to create measures.

- Select the column in the table for which you wish to create a measure

- From the Extensions menu, click "Columns" > "AutoSum" and select your aggregation method

![Creating measures (Salesforce is shown)]()
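Each AutoSum option creates a measure that aggregates the selected column, and a report re-evaluates that measure for every slice of data it displays. The sketch below imitates that behavior in plain Python by computing one aggregate per group. The function name, the sample rows, and the exact set of methods (Sum, Average, Count, DistinctCount, Max, Min) are assumptions for illustration, and DAX's handling of blank values is not modeled.

```python
from collections import defaultdict
from statistics import mean

# Aggregation methods assumed for this sketch; each takes a non-empty list.
AGGREGATIONS = {
    "Sum": sum,
    "Average": mean,
    "Count": len,
    "DistinctCount": lambda xs: len(set(xs)),
    "Max": max,
    "Min": min,
}


def measure_by_group(rows: list, group_col: str, value_col: str, method: str) -> dict:
    """Evaluate one aggregation per distinct value of group_col, roughly how
    a measure is recalculated for each row of a report sliced by group_col."""
    if method not in AGGREGATIONS:
        raise ValueError(f"unknown aggregation method {method!r}")
    groups = defaultdict(list)
    for row in rows:
        groups[row[group_col]].append(row[value_col])
    return {key: AGGREGATIONS[method](values) for key, values in groups.items()}


# Hypothetical rows standing in for an imported table.
SAMPLE_ROWS = [
    {"Region": "East", "Amount": 100},
    {"Region": "East", "Amount": 300},
    {"Region": "West", "Amount": 50},
]
print(measure_by_group(SAMPLE_ROWS, "Region", "Amount", "Sum"))
```

Swapping "Sum" for "Average" or "Count" shows how the same column yields a different measure depending on the aggregation method you pick.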

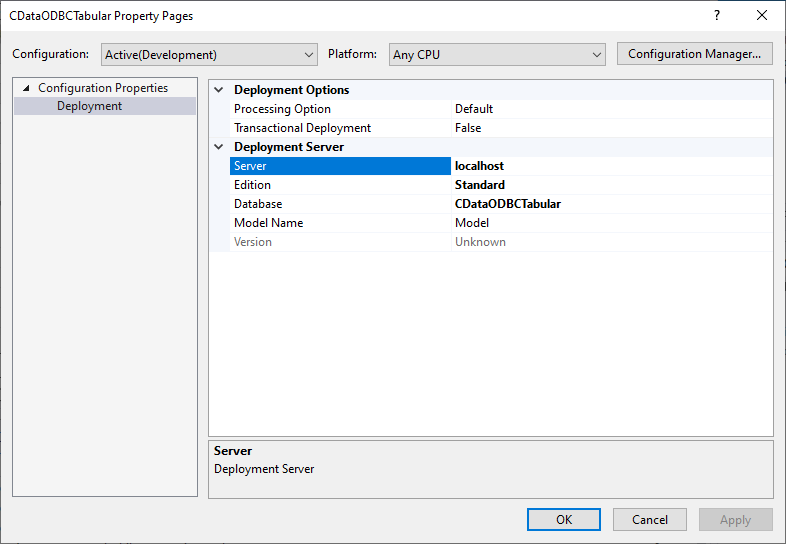

Deploy the Model

Once you have created your measures, you are ready to deploy the model. To configure the target server and database, right-click the project in Solution Explorer and select "Properties." Set the "Deployment Server" properties and click "OK."

After configuring the deployment server, open the "Build" menu and click "Deploy Solution." You now have a tabular model for Azure Data Lake Storage data in your SSAS instance, ready to be analyzed, reported, and viewed. Get started with a free, 30-day trial of the CData ODBC Driver for Azure Data Lake Storage.