Query Databricks Data in DataGrip

Create a Data Source for Databricks in DataGrip and use SQL to query live Databricks data.

DataGrip is a database IDE that allows SQL developers to query, create, and manage databases. When paired with the CData JDBC Driver for Databricks, DataGrip can work with live Databricks data. This article shows how to establish a connection to Databricks data in DataGrip and use the table editor to load Databricks data.

About Databricks Data Integration

Accessing and integrating live data from Databricks has never been easier with CData. Customers rely on CData connectivity to:

- Access all versions of Databricks, from Runtime versions 9.1 - 13.X to both the Pro and Classic Databricks SQL versions.

- Leave Databricks in their preferred environment thanks to compatibility with any hosting solution.

- Securely authenticate in a variety of ways, including personal access token, Azure Service Principal, and Azure AD.

- Upload data to Databricks using Databricks File System, Azure Blob Storage, and AWS S3 Storage.

While many customers use CData's solutions to migrate data from different systems into their Databricks data lakehouse, several customers use our live connectivity solutions to federate connectivity between their databases and Databricks. These customers use SQL Server Linked Servers or PolyBase to get live access to Databricks from within their existing RDBMS.

Read more about common Databricks use-cases and how CData's solutions help solve data problems in our blog: What is Databricks Used For? 6 Use Cases.

Getting Started

Create a New Driver Definition for Databricks

The steps below describe how to create a new Data Source in DataGrip for Databricks.

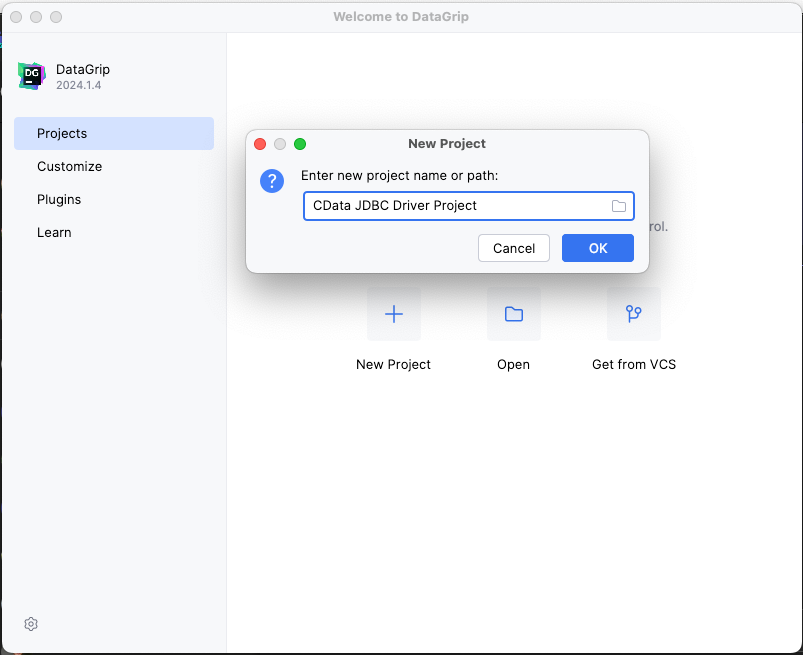

- In DataGrip, click File -> New -> Project and name the project.

![Creating a new DataGrip project.]()

- In the Database Explorer, click the plus icon (+) and select Driver.

![Adding a new Driver.]()

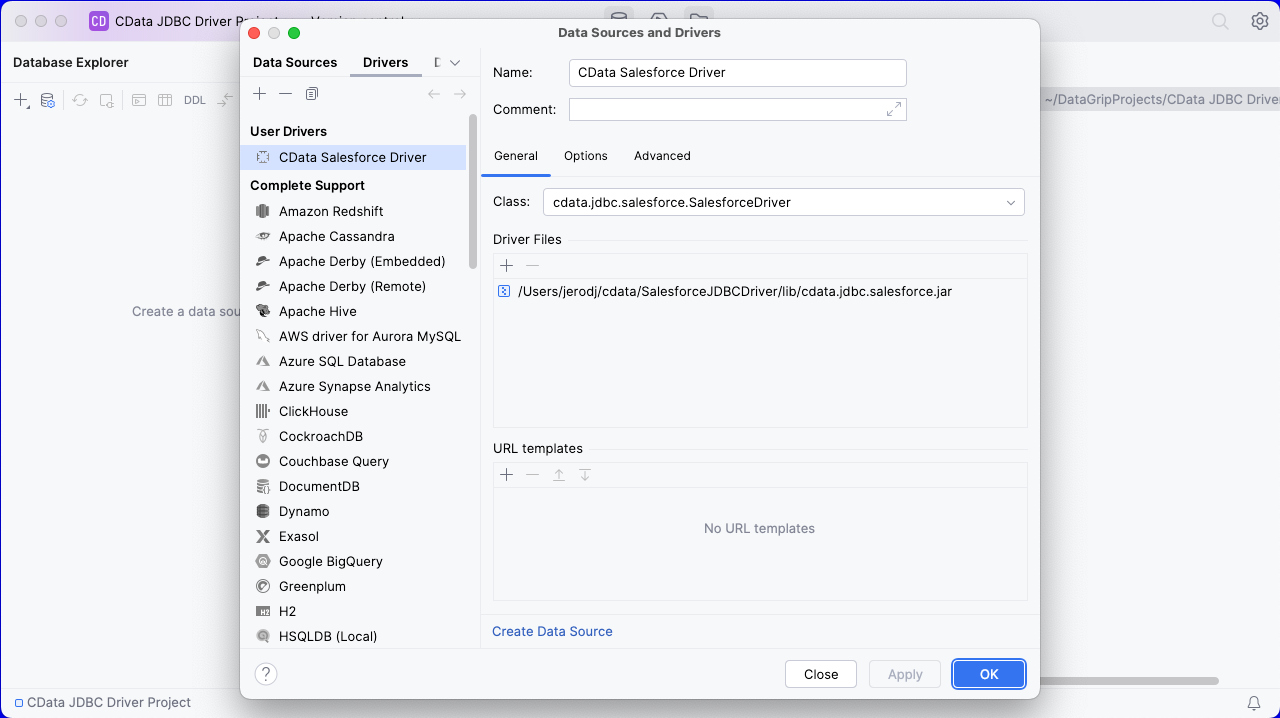

- In the Driver tab:

- Set Name to a user-friendly name (e.g. "CData Databricks Driver")

- Set Driver Files to the appropriate JAR file. To add the file, click the plus (+), select "Add Files," navigate to the "lib" folder in the driver's installation directory, and select the JAR file (e.g. cdata.jdbc.databricks.jar).

- Set Class to cdata.jdbc.databricks.DatabricksDriver

Additionally, in the Advanced tab you can change driver properties and other settings such as VM options, VM environment, VM home path, and DBMS. In most cases, change the DBMS type to "Unknown" in Expert options so that DataGrip does not issue native SQL Server queries (Transact-SQL), which might result in an invalid function error.

- Click "Apply" then "OK" to save the driver.
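If you are unsure which class name the JAR exposes, one way to check outside DataGrip is to list the JDBC drivers that Java can discover on the classpath. The sketch below assumes the driver JAR declares itself through the standard `META-INF/services/java.sql.Driver` mechanism, as JDBC 4 drivers typically do; the class name `ListJdbcDrivers` and the method `driverClassNames` are made up for this example.

```java
import java.sql.Driver;
import java.util.ArrayList;
import java.util.List;
import java.util.ServiceLoader;

public class ListJdbcDrivers {

    // Returns the class names of all JDBC drivers discoverable on the classpath.
    // Assumption: the driver JAR registers itself as a java.sql.Driver service.
    static List<String> driverClassNames() {
        List<String> names = new ArrayList<>();
        for (Driver driver : ServiceLoader.load(Driver.class)) {
            names.add(driver.getClass().getName());
        }
        return names;
    }

    public static void main(String[] args) {
        List<String> names = driverClassNames();
        if (names.isEmpty()) {
            System.out.println("No JDBC drivers found on the classpath.");
        }
        for (String name : names) {
            System.out.println(name);
        }
    }
}
```

Run it with the driver JAR on the classpath, for example `java -cp cdata.jdbc.databricks.jar ListJdbcDrivers.java` (Java 11 or later). Any class name it prints is a candidate for the Class field.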

![A configured Driver (Salesforce is shown).]()

Configure a Connection to Databricks

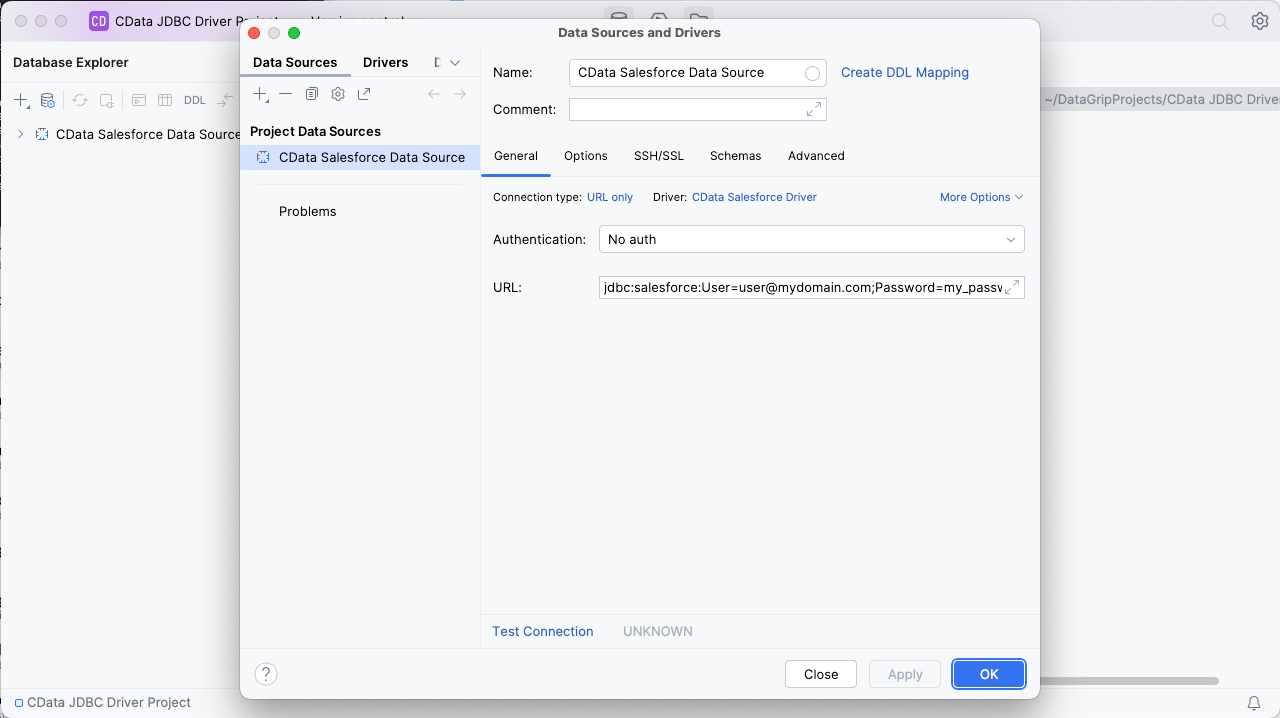

- Once the driver is saved, click the plus (+), then "Data Source," then "CData Databricks Driver" to create a new Databricks Data Source.

- In the new window, configure the connection to Databricks with a JDBC URL.

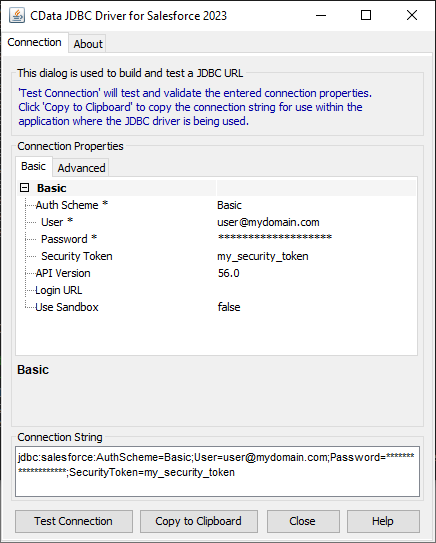

Built-in Connection String Designer

For assistance in constructing the JDBC URL, use the connection string designer built into the Databricks JDBC Driver. Either double-click the JAR file or execute the JAR file from the command line.

java -jar cdata.jdbc.databricks.jar

Fill in the connection properties and copy the connection string to the clipboard.

To connect to a Databricks cluster, set the properties as described below.

Note: The needed values can be found in your Databricks instance by navigating to Clusters, selecting the desired cluster, and opening the JDBC/ODBC tab under Advanced Options.

- Server: Set to the Server Hostname of your Databricks cluster.

- HTTPPath: Set to the HTTP Path of your Databricks cluster.

- Token: Set to your personal access token (this value can be obtained by navigating to the User Settings page of your Databricks instance and selecting the Access Tokens tab).
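To illustrate how the properties above fit together, the following Java sketch assembles them into a JDBC URL. The `jdbc:databricks:` prefix and the `Server`, `HTTPPath`, and `Token` property names come from this article; the class name `DatabricksUrlBuilder`, the method `buildUrl`, and all values are placeholders invented for the example. It does not escape special characters, so treat it as a sketch rather than a replacement for the connection string designer.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class DatabricksUrlBuilder {

    // Prefix taken from the example URL shown in this article.
    private static final String PREFIX = "jdbc:databricks:";

    // Joins Name=Value pairs with semicolons, preserving insertion order.
    static String buildUrl(Map<String, String> properties) {
        StringBuilder url = new StringBuilder(PREFIX);
        for (Map.Entry<String, String> entry : properties.entrySet()) {
            url.append(entry.getKey())
               .append('=')
               .append(entry.getValue())
               .append(';');
        }
        return url.toString();
    }

    public static void main(String[] args) {
        Map<String, String> props = new LinkedHashMap<>();
        props.put("Server", "MyServerHostname"); // placeholder: Server Hostname of the cluster
        props.put("HTTPPath", "MyHTTPPath");     // placeholder: HTTP Path of the cluster
        props.put("Token", "MyToken");           // placeholder: personal access token
        System.out.println(buildUrl(props));
        // prints: jdbc:databricks:Server=MyServerHostname;HTTPPath=MyHTTPPath;Token=MyToken;
    }
}
```

Avoid hard-coding a real access token in source files; reading it from an environment variable or a secrets store is the safer choice.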

![Using the built-in connection string designer to generate a JDBC URL (Salesforce is shown.)]()

- Set URL to the connection string, e.g.,

jdbc:databricks:Server=127.0.0.1;Port=443;TransportMode=HTTP;HTTPPath=MyHTTPPath;UseSSL=True;User=MyUser;Password=MyPassword;

- Click "Apply" and "OK" to save the connection string.

![A configured Data Source (Salesforce is shown).]()

At this point, you will see the data source in the Database Explorer.

Execute SQL Queries Against Databricks

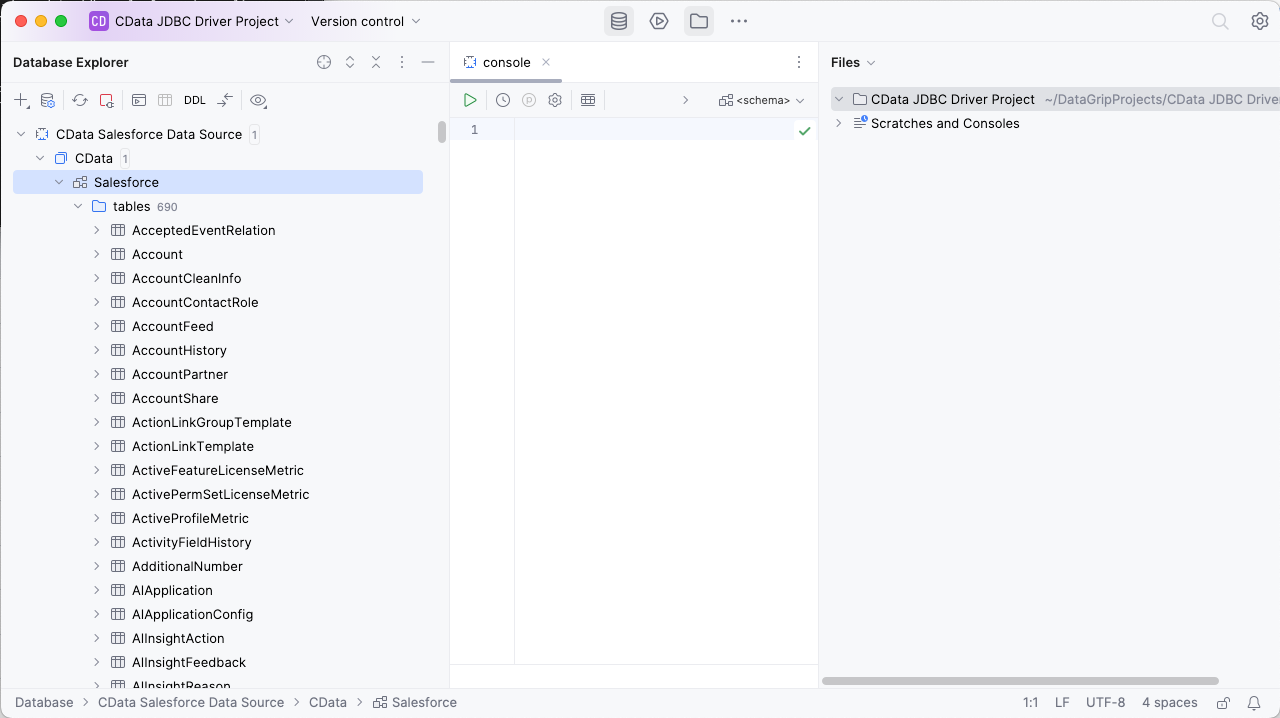

To browse through the Databricks entities (available as tables) accessible through the JDBC Driver, expand the Data Source.

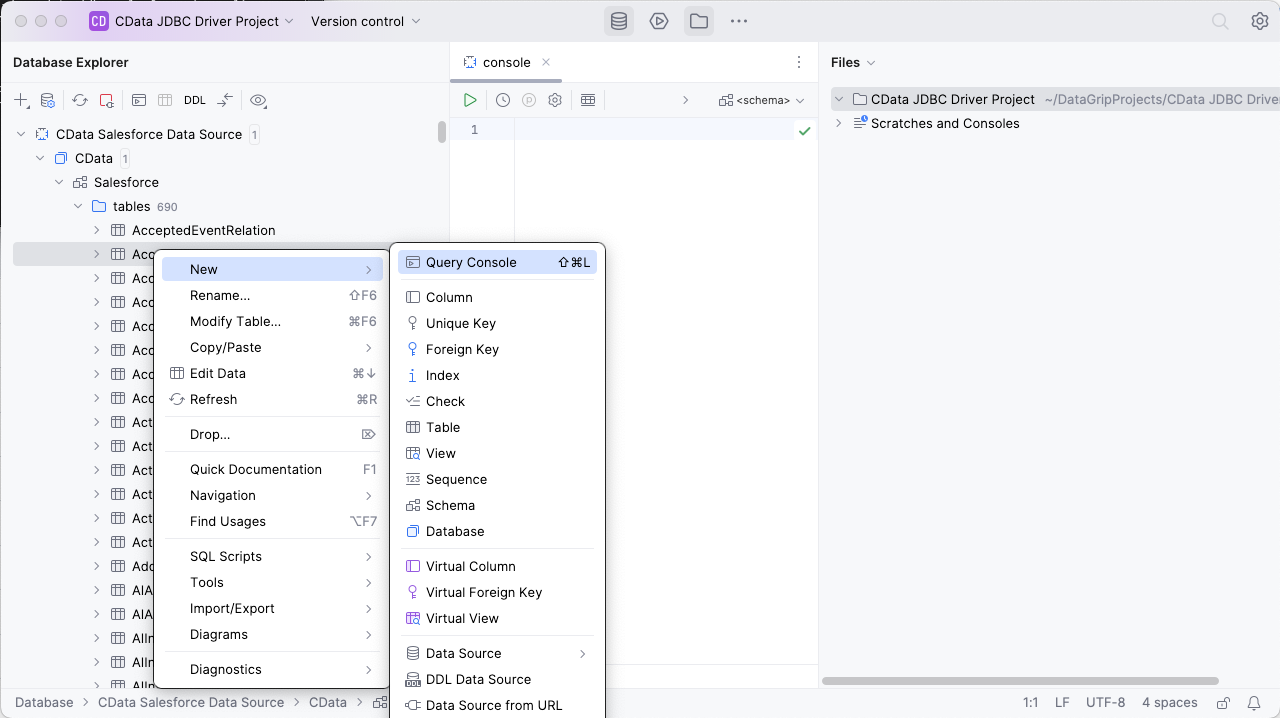

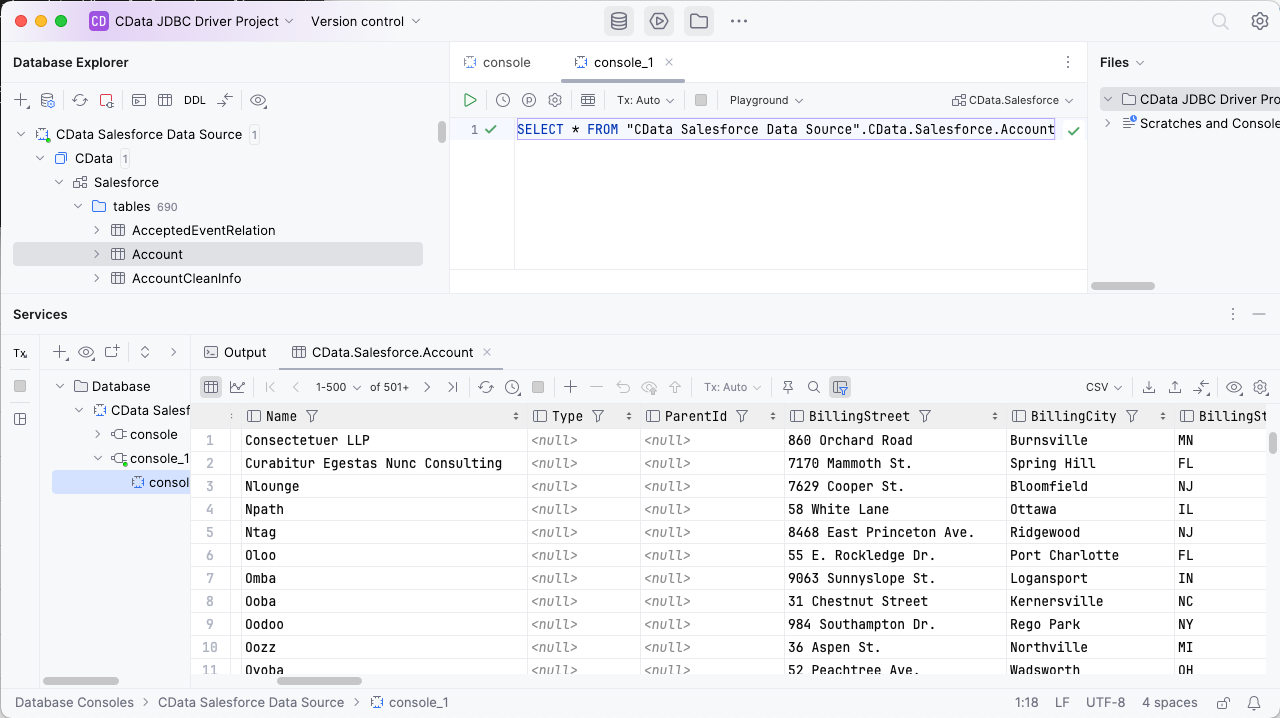

To execute queries, right-click any table and select "New" -> "Query Console."

In the Console, write the SQL query you wish to execute. For example: SELECT City, CompanyName FROM Customers WHERE Country = 'US'
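The same query can also be run outside DataGrip through plain JDBC. The sketch below is a minimal illustration, assuming the CData driver JAR is on the classpath and registers itself with `DriverManager`; the class name `CustomerQuery` and the environment variable `DATABRICKS_JDBC_URL` are hypothetical names chosen for this example, and the `Customers` table is the sample table used in the query above.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

public class CustomerQuery {

    // The example query from this article.
    static final String SQL =
        "SELECT City, CompanyName FROM Customers WHERE Country = 'US'";

    public static void main(String[] args) throws SQLException {
        // Hypothetical variable holding the JDBC URL configured earlier.
        String url = System.getenv("DATABRICKS_JDBC_URL");
        if (url == null || url.isEmpty()) {
            System.out.println("Set DATABRICKS_JDBC_URL to run the query.");
            return;
        }

        // try-with-resources closes the result set, statement, and connection.
        try (Connection conn = DriverManager.getConnection(url);
             Statement stmt = conn.createStatement();
             ResultSet rs = stmt.executeQuery(SQL)) {
            while (rs.next()) {
                System.out.println(
                    rs.getString("City") + "\t" + rs.getString("CompanyName"));
            }
        }
    }
}
```

Without the environment variable set, the program only prints a hint and exits, so it can be compiled and run safely before a connection is available.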

Download a free, 30-day trial of the CData JDBC Driver for Databricks and start working with your live Databricks data in DataGrip. Reach out to our Support Team if you have any questions.