How to Import Data from Databricks into Google Sheets

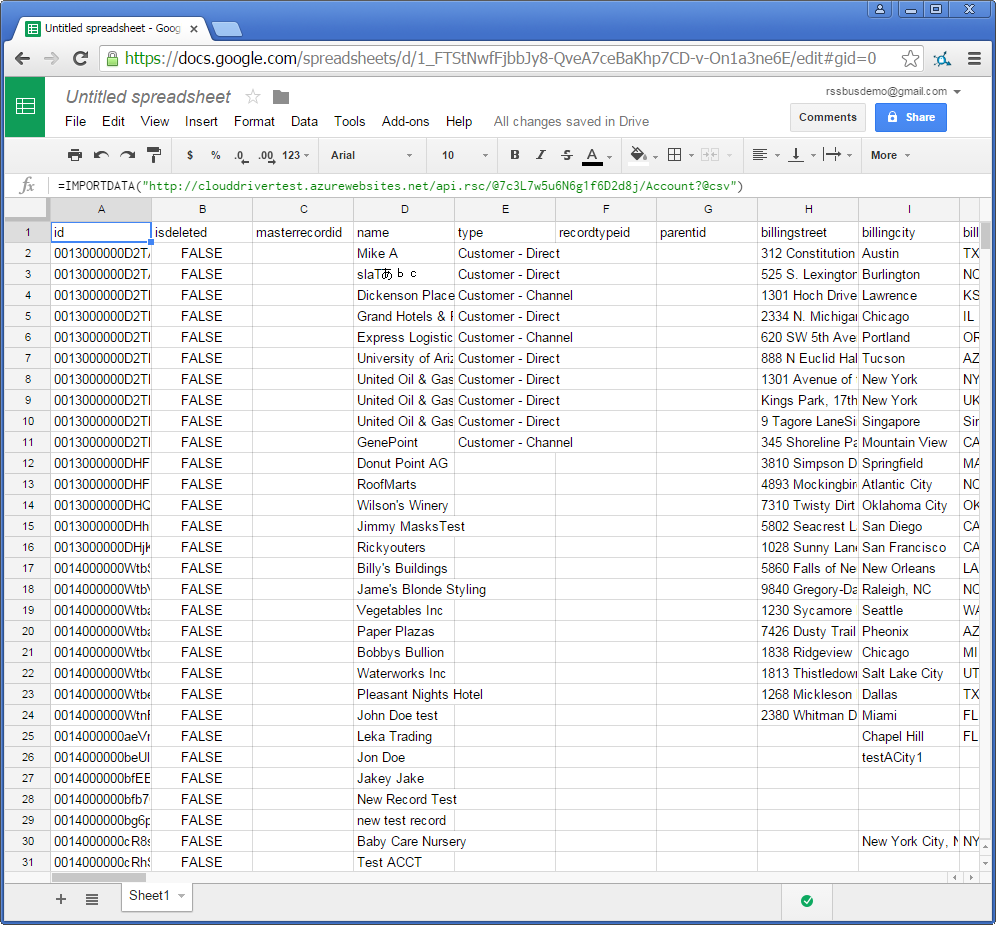

Import Databricks data into a Google spreadsheet that is automatically refreshed.

The CData API Server, when paired with the ADO.NET Provider for Databricks (or any of 200+ other ADO.NET Providers), connects cloud applications, mobile devices, and other online applications to Databricks data via Web services, the standards that enable real-time access to external data. Use Google Sheets' built-in support for Web services to rely on up-to-date Databricks data, automate manual processes like rekeying data, and avoid uploading files.

What Is the API Server?

The API Server is a lightweight Web application that runs on your server and produces secure feeds of any of 200+ data sources. The .NET edition can be deployed to Azure in 3 steps. The Java edition can be deployed to Heroku as well as any Java servlet container. You can also easily deploy on an Amazon EC2 AMI.

About Databricks Data Integration

Accessing and integrating live data from Databricks has never been easier with CData. Customers rely on CData connectivity to:

- Access all versions of Databricks from Runtime Versions 9.1 - 13.X to both the Pro and Classic Databricks SQL versions.

- Leave Databricks in their preferred environment thanks to compatibility with any hosting solution.

- Securely authenticate in a variety of ways, including personal access token, Azure Service Principal, and Azure AD.

- Upload data to Databricks using Databricks File System, Azure Blob Storage, and AWS S3 Storage.

While many customers use CData's solutions to migrate data from different systems into their Databricks data lakehouse, several customers use our live connectivity solutions to federate connectivity between their databases and Databricks. These customers use SQL Server Linked Servers or PolyBase to get live access to Databricks from within their existing RDBMS.

Read more about common Databricks use-cases and how CData's solutions help solve data problems in our blog: What is Databricks Used For? 6 Use Cases.

Getting Started

Why Web Services?

Google Sheets is one of the easiest ways to collaborate in real time, so it should be easy to work collaboratively with Databricks data and your other data sources in Google Sheets. However, it can be difficult to get data into Google Sheets. Often, a manual process is required, such as rekeying data or uploading CSV files. Because the spreadsheet then holds a copy rather than the external data, it quickly becomes out of date.

The API Server provides an alternative to physically transferring data into and out of Google Sheets. The API Server provides native read/write connectivity to external Databricks data from both built-in formulas and Google Apps Script.

Instead of manually copying your data, formatting it, and uploading a spreadsheet, retrieving Databricks data into Google Sheets is as simple as using the ImportData formula to make calls to the API Server. The API Server exposes the capabilities of the Databricks API as standard OData queries. The sheet will automatically retrieve updates by periodically executing the query. Below is an example of a search.

https://MyServer:MyPort/api.rsc/Customers/?$filter=Country eq 'US'

See the API page in the API Server administration console for more information on the supported OData.
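As a minimal sketch of how such a query URL can be assembled programmatically, the Python snippet below percent-encodes an OData filter expression and appends it as the standard `$filter` system query option. The helper name `build_odata_url` is hypothetical, and the server, port, and `Customers` resource are the placeholders from the example above, not values from a real deployment.

```python
from urllib.parse import quote


def build_odata_url(base_url: str, resource: str, filter_expr: str) -> str:
    """Return an OData query URL with the $filter expression percent-encoded.

    Hypothetical helper for illustration; it is not part of the API Server.
    """
    # Spaces become %20; single quotes are left readable for string literals.
    encoded = quote(filter_expr, safe="'")
    return f"{base_url.rstrip('/')}/{resource}?$filter={encoded}"


# Placeholder server and resource taken from the example in the text.
url = build_odata_url("https://MyServer:MyPort/api.rsc", "Customers", "Country eq 'US'")
print(url)
```

Encoding the spaces up front avoids relying on the client to do it, which matters when the URL is pasted into a spreadsheet formula.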

Are Web Services Secure?

The CData API Server, when paired with the ADO.NET Provider for Databricks (or any of 200+ other ADO.NET Providers), helps you control access to your data. Unlike data integration services hosted in the cloud, the API Server runs on servers you control; it can run inside or outside the firewall.

You can also use the API Server to take advantage of standard technology for protecting the confidentiality, authenticity, and intended recipients of your data. The API Server supports TLS/SSL and the major forms of authentication. The .NET edition supports standard ASP.NET security. The Java edition is integrated with J2EE security.

How to Retrieve External Databricks Data in Google Sheets

You can consume Databricks data in Google Sheets in 3 steps:

If you have not already connected successfully in the API Server administration console, see the "Getting Started" chapter in the help documentation for a guide.

Authenticate your query with the authtoken of a user authorized to access the OData endpoint of the API Server. The API Server also restricts access based on IP address; you will need to enable access from Google's servers. You can configure access controls on the Security tab.

The ImportData function takes one parameter, the URL, so authentication must be supplied in the URL. For security reasons, the API Server does not allow setting the authtoken in the URL by default, so you will need to enable this by adding the following in settings.cfg. If you would like to use another authentication scheme, like HTTP Basic, see the Databricks and Google Apps Script how-to.

[Application]
AllowAuthTokenInUrl = true

The settings.cfg file is located in the data directory. In the .NET edition, the data directory is the app_data folder under the www folder. In the Java edition, the data directory's location depends on the operating system:

- Windows: C:\ProgramData\CData\Databricks\

- Unix or Mac OS X: ~/cdata/Databricks/
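Before testing the formula, it can help to confirm that the setting was saved as intended. The sketch below assumes settings.cfg is INI-style text that Python's `configparser` can read, which the `[Application]` section shown above suggests but does not guarantee. The helper name and the sample text are illustrative only.

```python
import configparser

# Sample contents mirroring the setting shown in the text.
SAMPLE_SETTINGS = """\
[Application]
AllowAuthTokenInUrl = true
"""


def authtoken_in_url_enabled(settings_text: str) -> bool:
    """Return True if AllowAuthTokenInUrl is set to a true value.

    Assumes INI-style syntax; a missing section or key counts as disabled.
    """
    parser = configparser.ConfigParser()
    parser.read_string(settings_text)
    return parser.getboolean("Application", "AllowAuthTokenInUrl", fallback=False)


print(authtoken_in_url_enabled(SAMPLE_SETTINGS))
```

To check a real installation, read the file from the data directory for your edition and pass its text to the helper.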

In a new Google sheet, use the ImportData formula to request the CSV file from the OData endpoint of the API Server. Specify the format of the response with the @csv query string parameter. Google will periodically update the results of the formula, ensuring that the sheet contains up-to-date data. You can request the entire Customers table with a formula like the one below:

=ImportData("https://your-server/api.rsc/Customers?@csv&@authtoken=your-authtoken")
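If you maintain several sheets or tables, generating the formula text can reduce copy-and-paste mistakes. The following Python sketch builds the same formula from its parts and percent-encodes the authtoken so that characters such as `&` do not break the query string. The function name is hypothetical, and the URL layout simply follows the example above.

```python
from urllib.parse import quote


def build_importdata_formula(server: str, table: str, authtoken: str) -> str:
    """Return an ImportData formula requesting a table as CSV.

    Illustrative helper; the URL layout follows the example in the text.
    """
    # Encode the table name and token so reserved characters stay intact.
    url = (
        f"https://{server}/api.rsc/{quote(table)}"
        f"?@csv&@authtoken={quote(authtoken, safe='')}"
    )
    return f'=ImportData("{url}")'


print(build_importdata_formula("your-server", "Customers", "your-authtoken"))
```

Paste the printed formula into a cell of the sheet. Because the authtoken appears in the formula, anyone who can view the sheet can read it, so consider using an API Server user with limited permissions.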