Automate Tasks in Power Automate Using the CData API Server and Databricks ADO.NET Provider

Automate actions like sending emails to a contact list, posting to social media, or syncing CRM and ERP.

Power Automate (formerly Microsoft Flow) makes it easy to automate tasks that involve data from multiple systems, on premises or in the cloud. With the CData API Server and Databricks ADO.NET Provider (or any of 200+ other ADO.NET Providers), line-of-business users can create Power Automate actions based on Databricks triggers. The API Server lets SaaS applications like Power Automate integrate with Databricks data through data access standards such as Swagger and OData. This article shows how to use wizards in Power Automate and the API Server for Databricks to create a trigger (entities that match search criteria) and send an email based on the results.

About Databricks Data Integration

Accessing and integrating live data from Databricks has never been easier with CData. Customers rely on CData connectivity to:

- Access all versions of Databricks, from Runtime versions 9.1 through 13.X, as well as both the Pro and Classic Databricks SQL versions.

- Leave Databricks in their preferred environment thanks to compatibility with any hosting solution.

- Securely authenticate in a variety of ways, including personal access token, Azure Service Principal, and Azure AD.

- Upload data to Databricks using Databricks File System, Azure Blob Storage, and AWS S3 Storage.

While many customers use CData's solutions to migrate data from different systems into their Databricks data lakehouse, others use our live connectivity solutions to federate access between their databases and Databricks. These customers use SQL Server Linked Servers or PolyBase to get live access to Databricks from within their existing RDBMSs.

Read more about common Databricks use-cases and how CData's solutions help solve data problems in our blog: What is Databricks Used For? 6 Use Cases.

Getting Started

Set Up the API Server

Follow the steps below to begin producing secure and Swagger-enabled Databricks APIs:

Deploy

The API Server runs on your own server. On Windows, you can deploy using the stand-alone server or IIS. To deploy on a Java servlet container, drop in the API Server WAR file. See the help documentation for more information and how-tos.

The API Server is also easy to deploy on Microsoft Azure, Amazon EC2, and Heroku.

Connect to Databricks

After you deploy, provide authentication values and other connection properties by clicking Settings -> Connections in the API Server administration console. You can then choose the entities the API Server is allowed to access by clicking Settings -> Resources.

To connect to a Databricks cluster, set the properties as described below.

Note: You can find the needed values in your Databricks instance by navigating to Clusters, selecting the desired cluster, and opening the JDBC/ODBC tab under Advanced Options.

- Server: Set to the Server Hostname of your Databricks cluster.

- HTTPPath: Set to the HTTP Path of your Databricks cluster.

- Token: Set to your personal access token (this value can be obtained by navigating to the User Settings page of your Databricks instance and selecting the Access Tokens tab).
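The console asks for exactly these three properties. When the same provider is used directly from code, such values are usually combined into a semicolon-separated connection string. The sketch below assumes that `Property=Value;` layout and only validates and joins the values; the helper name `build_connection_string` and the sample values are hypothetical, and it rejects values containing `;` rather than guessing at the provider's quoting rules.

```python
# Property names match the list above; the Property=Value; layout is an assumption.
REQUIRED_PROPERTIES = ("Server", "HTTPPath", "Token")


def build_connection_string(props: dict[str, str]) -> str:
    """Join Databricks connection properties into a Property=Value; string."""
    missing = [name for name in REQUIRED_PROPERTIES if not props.get(name)]
    if missing:
        raise ValueError(f"missing connection properties: {', '.join(missing)}")
    for name, value in props.items():
        # Refuse to guess at quoting rules for values that contain the separator.
        if ";" in value:
            raise ValueError(f"{name} contains ';' and would need quoting")
    return "".join(f"{name}={value};" for name, value in props.items())


# Placeholder values only; copy the real ones from the JDBC/ODBC tab and User Settings.
print(build_connection_string({
    "Server": "dbc-example.cloud.databricks.com",
    "HTTPPath": "sql/protocolv1/o/0/0000-000000-example",
    "Token": "dapiEXAMPLE",
}))
```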

You will also need to enable CORS and define the following settings on the Settings -> Server page. As an alternative to entering '*', you can select the option to allow all domains.

- Access-Control-Allow-Origin: Set this to a value of '*' or specify the domains that are allowed to connect.

- Access-Control-Allow-Methods: Set this to a value of "GET,PUT,POST,OPTIONS".

- Access-Control-Allow-Headers: Set this to "x-ms-client-request-id, authorization, content-type".
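One way to double-check these settings is to compare the headers returned by a CORS preflight (`OPTIONS`) request against the values above. The sketch below performs only that comparison, on a plain dictionary of response headers, so it makes no assumptions about how you issue the request; `missing_cors_settings` is a hypothetical helper.

```python
# Values this article asks for on the Settings -> Server page (compared case-insensitively).
REQUIRED_METHODS = {"get", "put", "post", "options"}
REQUIRED_HEADERS = {"x-ms-client-request-id", "authorization", "content-type"}


def _as_set(value: str) -> set[str]:
    """Split a comma-separated header value into a lowercase set."""
    return {part.strip().lower() for part in value.split(",") if part.strip()}


def missing_cors_settings(headers: dict[str, str], origin: str) -> list[str]:
    """Return the CORS response headers that do not cover the required values."""
    # HTTP header names are case-insensitive, so normalize them first.
    h = {name.lower(): value for name, value in headers.items()}
    problems = []
    if h.get("access-control-allow-origin", "").strip() not in ("*", origin):
        problems.append("Access-Control-Allow-Origin")
    if not REQUIRED_METHODS <= _as_set(h.get("access-control-allow-methods", "")):
        problems.append("Access-Control-Allow-Methods")
    if not REQUIRED_HEADERS <= _as_set(h.get("access-control-allow-headers", "")):
        problems.append("Access-Control-Allow-Headers")
    return problems
```

An empty list means the response covers every value listed above. If you fetch the preflight with `urllib.request`, `dict(response.headers.items())` gives a suitable input.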

Authorize API Server Users

After determining the OData services you want to produce, authorize users by clicking Settings -> Users. The API Server uses authtoken-based authentication and supports the major authentication schemes. You can authenticate as well as encrypt connections with SSL. Access can also be restricted by IP address; access is restricted to only the local machine by default.

For simplicity, we will allow the authtoken for API users to be passed in the URL. To do so, add the following setting to the Application section of the settings.cfg file, located in the data directory. On Windows, this is the app_data subfolder in the application root. In the Java edition, the location of the data directory depends on your operating system:

- Windows: C:\ProgramData\CData

- Unix or Mac OS X: ~/cdata

```ini
[Application]
AllowAuthtokenInURL = true
```
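If you would rather script this change than edit the file by hand, Python's standard `configparser` module can set the flag, assuming settings.cfg is a plain INI-style file like the fragment above. Note that `configparser` rewrites the whole file and drops any comments, so keep a backup. The function name and the example path are hypothetical.

```python
import configparser
from pathlib import Path


def enable_authtoken_in_url(settings_path: Path) -> None:
    """Set AllowAuthtokenInURL = true in the [Application] section of settings.cfg."""
    # interpolation=None keeps '%' characters in existing values from being interpreted.
    parser = configparser.ConfigParser(interpolation=None)
    # configparser lowercases option names by default; keep them as written.
    parser.optionxform = str
    parser.read(settings_path, encoding="utf-8")  # a missing file is treated as empty
    if not parser.has_section("Application"):
        parser.add_section("Application")
    parser.set("Application", "AllowAuthtokenInURL", "true")
    with settings_path.open("w", encoding="utf-8") as fh:
        parser.write(fh)


# Hypothetical location; substitute the data directory described above.
# enable_authtoken_in_url(Path.home() / "cdata" / "settings.cfg")
```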

Add Databricks Data to a Flow

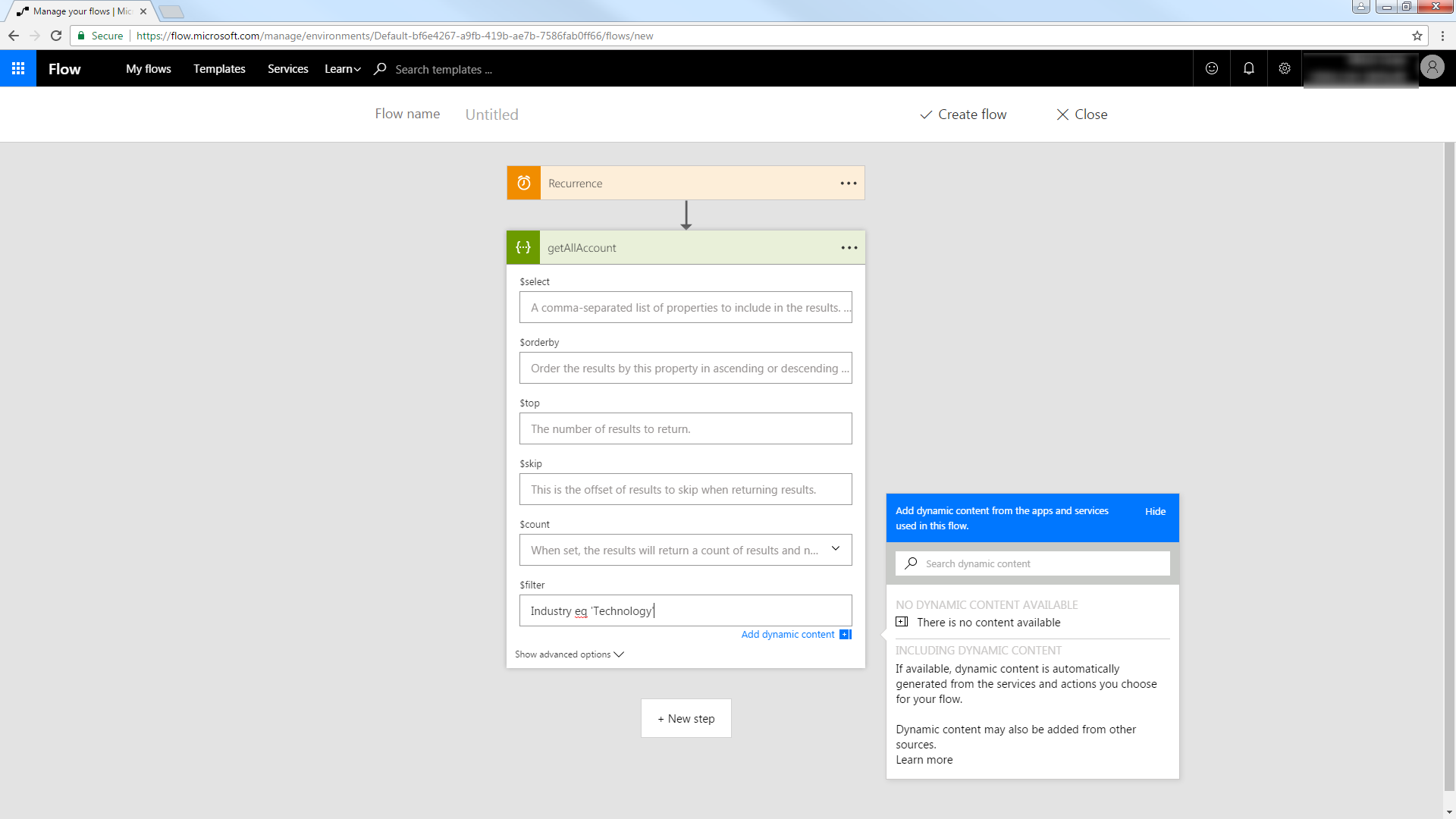

You can use the wizard in the built-in HTTP + Swagger connector to design a Databricks process flow:

- In Power Automate, click My Flows -> Create from Blank.

- Select the Recurrence trigger and choose a time interval for sending emails. This article uses 1 day.

- Add an HTTP + Swagger action by searching for Swagger.

- Enter the URL to the Swagger metadata document:

https://MySite:MyPort/api.rsc/@MyAuthtoken/$oas

- Select the "Return Customers" operation.
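The metadata URL follows a fixed pattern, so it can be assembled from its placeholders. This sketch assumes HTTPS, the `api.rsc` path shown above, and an authtoken passed in the URL (which requires the AllowAuthtokenInURL setting); `swagger_url` and the port 8443 are hypothetical.

```python
from urllib.parse import quote


def swagger_url(site: str, port: int, authtoken: str) -> str:
    """Build the API Server's Swagger metadata URL with the authtoken in the path."""
    # Percent-encode the token so unexpected characters cannot break the path.
    token = quote(authtoken, safe="")
    return f"https://{site}:{port}/api.rsc/@{token}/$oas"


print(swagger_url("MySite", 8443, "MyAuthtoken"))
# https://MySite:8443/api.rsc/@MyAuthtoken/$oas
```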

Build the OData query to retrieve Databricks data. This article defines the following OData filter expression in the $filter box:

Country eq 'US'

See the API Server help documentation for more on filtering and examples of the supported OData.
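Power Automate builds the request from the $filter box, but it can help to see the same query as a raw OData URL, for example when testing it in a browser. The sketch below assumes entity sets are exposed under the same `api.rsc/@MyAuthtoken` base as the Swagger document; the helper names and port are hypothetical. It also shows OData's rule for quotes inside string literals (a single quote is doubled), which matters if a filter value such as a company name contains an apostrophe.

```python
from urllib.parse import quote


def odata_eq(column: str, value: str) -> str:
    """Build an OData 'eq' comparison against a string literal."""
    # OData string literals are single-quoted; a quote inside the value is doubled.
    escaped = value.replace("'", "''")
    return f"{column} eq '{escaped}'"


def entity_query_url(base: str, entity: str, filter_expr: str) -> str:
    """Append an entity set and a percent-encoded $filter to the API base URL."""
    return f"{base}/{entity}?$filter={quote(filter_expr, safe='')}"


base = "https://MySite:8443/api.rsc/@MyAuthtoken"  # assumed base URL
print(entity_query_url(base, "Customers", odata_eq("Country", "US")))
# https://MySite:8443/api.rsc/@MyAuthtoken/Customers?$filter=Country%20eq%20%27US%27
```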

Trigger an Action

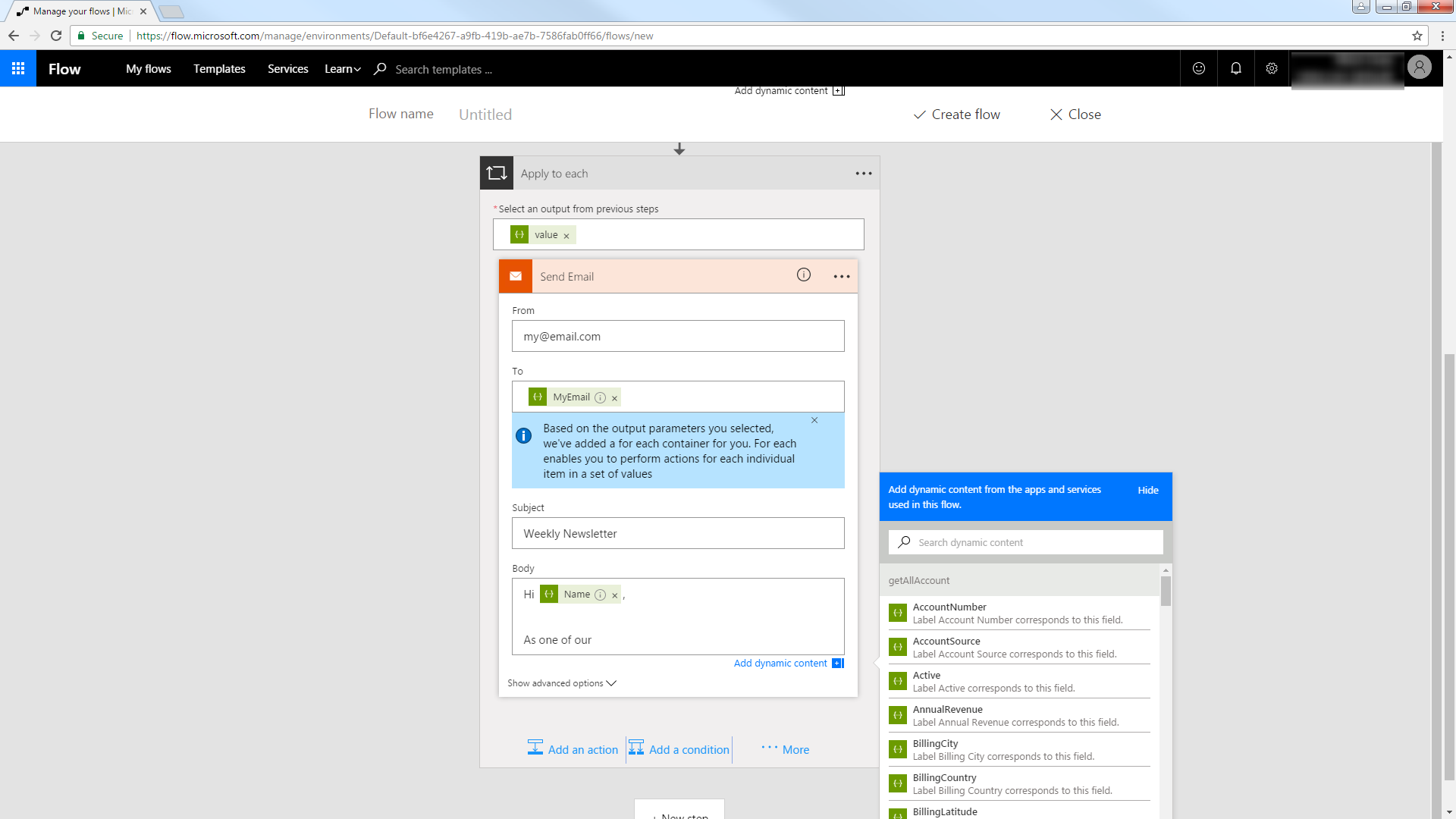

You can now work with Customers entities in your process flow. Follow the steps to send an automated email:

- Add an SMTP - Send Email action.

- Enter the address and credentials for the SMTP server and name the connection. Be sure to enable encryption if supported by your server.

- Enter the message headers and body. You can add Databricks columns in these boxes.
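Behind the scenes, the flow reads an OData response and turns selected columns into message text. The sketch below reproduces that step with Python's standard `json` and `email` modules, assuming the standard OData JSON shape (rows under a `value` array). `CompanyName` is a hypothetical column name; only `Country` appears in this article. It builds the message without sending it.

```python
import json
from email.message import EmailMessage


def build_report(odata_json: str, sender: str, recipient: str) -> EmailMessage:
    """Turn an OData JSON response into a plain-text email listing each row."""
    rows = json.loads(odata_json).get("value", [])  # OData JSON puts rows in "value"
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = recipient
    msg["Subject"] = f"Matching customers: {len(rows)}"
    # CompanyName is a hypothetical column; Country is the column filtered on above.
    lines = [f"- {row.get('CompanyName', '?')} ({row.get('Country', '?')})" for row in rows]
    msg.set_content("\n".join(lines) or "No matching customers.")
    return msg


sample = '{"value": [{"CompanyName": "Acme", "Country": "US"}]}'
print(build_report(sample, "flow@example.com", "team@example.com"))
```

To actually send it, `smtplib.SMTP(host, port)` followed by `starttls()`, `login(user, password)`, and `send_message(msg)` mirrors the SMTP connection settings in the step above, including the advice to enable encryption.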