How to Work with PingOne Data in AWS Glue Jobs Using JDBC

Connect to PingOne from AWS Glue jobs using the CData JDBC Driver hosted in Amazon S3.

AWS Glue is an ETL service from Amazon that allows you to easily prepare and load your data for storage and analytics. Using the PySpark module along with AWS Glue, you can create jobs that work with data over JDBC connectivity, loading the data directly into AWS data stores. In this article, we walk through uploading the CData JDBC Driver for PingOne into an Amazon S3 bucket and creating and running an AWS Glue job to extract PingOne data and store it in S3 as a CSV file.

Upload the CData JDBC Driver for PingOne to an Amazon S3 Bucket

In order to work with the CData JDBC Driver for PingOne in AWS Glue, you will need to store it (and any relevant license files) in an Amazon S3 bucket.

- Open the Amazon S3 Console.

- Select an existing bucket (or create a new one).

- Click Upload.

- Select the JAR file (cdata.jdbc.pingone.jar) found in the lib directory in the installation location for the driver.
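If you prefer to script the upload, the sketch below does the same thing with boto3, the AWS SDK for Python. It assumes boto3 is installed and AWS credentials are configured. The bucket name, the optional key prefix, and the local JAR path are placeholders. The upload function returns the s3:// URI of the uploaded object, including the JAR file name, which is the form the job's Dependent jars path expects.

```python
from pathlib import Path


def jar_object_key(jar_path: str, prefix: str = "") -> str:
    """S3 object key for the JAR: its file name, optionally under a folder-like prefix."""
    name = Path(jar_path).name
    folder = prefix.strip("/")
    return f"{folder}/{name}" if folder else name


def jar_s3_uri(bucket: str, jar_path: str, prefix: str = "") -> str:
    """s3:// URI of the uploaded JAR, with the JAR file name itself included."""
    return f"s3://{bucket}/{jar_object_key(jar_path, prefix)}"


def upload_driver_jar(bucket: str, jar_path: str, prefix: str = "") -> str:
    """Upload the driver JAR to S3 with boto3 and return its s3:// URI."""
    import boto3  # imported here so the pure helpers above work without boto3 installed

    boto3.client("s3").upload_file(jar_path, bucket, jar_object_key(jar_path, prefix))
    return jar_s3_uri(bucket, jar_path, prefix)
```

For example, upload_driver_jar("mybucket", "/opt/cdata/lib/cdata.jdbc.pingone.jar") would return "s3://mybucket/cdata.jdbc.pingone.jar"; the /opt/cdata/lib path is hypothetical, so substitute the lib directory of your own driver installation.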

Configure the AWS Glue Job

- Navigate to ETL -> Jobs from the AWS Glue Console.

- Click Add Job to create a new Glue job.

- Fill in the Job properties:

- Name: Fill in a name for the job, for example: PingOneGlueJob.

- IAM Role: Select (or create) an IAM role that has the AWSGlueServiceRole and AmazonS3FullAccess permissions policies. The latter policy is necessary to access both the JDBC Driver and the output destination in Amazon S3.

- Type: Select "Spark".

- Glue Version: Select "Spark 2.4, Python 3 (Glue Version 1.0)".

- This job runs: Select "A new script to be authored by you".

- Populate the script properties:

- Script file name: A name for the script file, for example: GluePingOneJDBC.

- S3 path where the script is stored: Fill in or browse to an S3 bucket.

- Temporary directory: Fill in or browse to an S3 bucket.

- Expand Security configuration, script libraries and job parameters (optional). For Dependent jars path, fill in or browse to the S3 bucket where you uploaded the JAR file. Be sure to include the name of the JAR file itself in the path, for example: s3://mybucket/cdata.jdbc.pingone.jar

- Click Next. Here you have the option to add connections to other AWS endpoints. If your destination is Redshift, MySQL, or another data store, you can create and use connections to it.

- Click "Save job and edit script" to create the job.

- In the editor that opens, write a Python script for the job. You can use the sample script (see below) as an example.
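The console settings above can also be expressed as an AWS Glue CreateJob request. The sketch below only builds the request parameters, so it runs without AWS access. The role name, script location, temporary directory, and JAR URI are placeholders. In the Glue job API, --extra-jars and --TempDir are the job parameters behind the console's Dependent jars path and Temporary directory fields, and glueetl is the command name for a Spark job.

```python
def glue_job_params(name: str, role: str, script_location: str,
                    temp_dir: str, driver_jar_uri: str) -> dict:
    """Keyword arguments for glue.create_job() mirroring the console settings above."""
    return {
        "Name": name,
        "Role": role,  # IAM role name or ARN with the Glue service and S3 permissions
        "GlueVersion": "1.0",  # matches "Spark 2.4, Python 3 (Glue Version 1.0)"
        "Command": {
            "Name": "glueetl",  # Spark ETL job type
            "ScriptLocation": script_location,
            "PythonVersion": "3",
        },
        "DefaultArguments": {
            "--job-language": "python",
            "--TempDir": temp_dir,
            # Full S3 path to the driver JAR, file name included.
            "--extra-jars": driver_jar_uri,
        },
    }
```

Creating the job is then a single call such as boto3.client("glue").create_job(**glue_job_params("PingOneGlueJob", "MyGlueRole", ...)), where MyGlueRole is a hypothetical role name.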

Sample Glue Script

To connect to PingOne using the CData JDBC driver, you will need to create a JDBC URL, populating the necessary connection properties. Additionally, you will need to set the RTK property in the JDBC URL (unless you are using a Beta driver). You can view the licensing file included in the installation for information on how to set this property.
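A CData JDBC URL is the jdbc:pingone: prefix followed by Name=Value; pairs, as in the sample script later in this article. The helper below assembles one from a dictionary so that the RTK and other properties are not hand-edited inside a long string. It is a sketch: it leaves out unset values (for example, RTK when using a Beta driver) and rejects values containing a semicolon rather than guessing at the driver's quoting rules.

```python
def build_jdbc_url(properties: dict) -> str:
    """Join connection properties into a URL of the form jdbc:pingone:Name=Value;Name=Value;"""
    pairs = []
    for name, value in properties.items():
        if value is None or value == "":
            continue  # leave unset properties (e.g. RTK with a Beta driver) out of the URL
        text = str(value)
        if ";" in text:
            # ';' separates properties; this sketch does not attempt any quoting.
            raise ValueError(f"value for {name} contains ';'")
        pairs.append(f"{name}={text};")
    return "jdbc:pingone:" + "".join(pairs)
```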

To connect to PingOne, configure these properties:

- Region: The region where the data for your PingOne organization is hosted.

- AuthScheme: The type of authentication to use when connecting to PingOne.

- Either WorkerAppEnvironmentId (required when using the default PingOne domain) or AuthorizationServerURL, configured as described below.
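Before building a connection string, it can help to confirm these properties are present. The function below is a hypothetical pre-flight check, not part of the driver: it only tests for non-empty values and does not validate them against PingOne.

```python
def missing_connection_properties(props: dict) -> list:
    """Names of required PingOne properties that are absent or empty in props."""
    missing = [name for name in ("Region", "AuthScheme") if not props.get(name)]
    # One of these identifies the environment: the ID for the default
    # PingOne domain, or the authorization server URL for a custom domain.
    if not (props.get("WorkerAppEnvironmentId") or props.get("AuthorizationServerURL")):
        missing.append("WorkerAppEnvironmentId or AuthorizationServerURL")
    return missing
```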

Configuring WorkerAppEnvironmentId

WorkerAppEnvironmentId is the ID of the PingOne environment in which your Worker application resides. This parameter is used only when the environment is using the default PingOne domain (auth.pingone). It is configured after you have created the custom OAuth application you will use to authenticate to PingOne, as described in Creating a Custom OAuth Application in the Help documentation.

First, find the value for this property:

- From the home page of your PingOne organization, move to the navigation sidebar and click Environments.

- Find the environment in which you have created your custom OAuth/Worker application (usually Administrators), and click Manage Environment. The environment's home page displays.

- In the environment's home page navigation sidebar, click Applications.

- Find your OAuth or Worker application details in the list.

- Copy the value in the Environment ID field.

It should look similar to:

WorkerAppEnvironmentId='11e96fc7-aa4d-4a60-8196-9acf91424eca'

Now set WorkerAppEnvironmentId to the value of the Environment ID field.
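The sample value has the shape of a UUID. Assuming Environment IDs always follow that format (an inference from the example, not something stated here), a quick check like the one below catches a value that was truncated or copied with stray characters before it reaches the JDBC URL.

```python
import uuid


def normalize_environment_id(raw: str) -> str:
    """Return the Environment ID in canonical lowercase 8-4-4-4-12 form, or raise ValueError."""
    # Tolerate surrounding whitespace and the quotes used in the example above.
    cleaned = raw.strip().strip("'\"")
    return str(uuid.UUID(cleaned))  # uuid.UUID raises ValueError for malformed input
```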

Configuring AuthorizationServerURL

AuthorizationServerURL is the base URL of the PingOne authorization server for the environment where your application is located. This property is only used when you have set up a custom domain for the environment, as described in the PingOne platform API documentation. See Custom Domains.
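When a custom domain is in use, AuthorizationServerURL takes the place of WorkerAppEnvironmentId. The sketch below checks only that the value is an absolute https:// URL. That requirement is an assumption based on OAuth authorization servers being served over TLS, and auth.example.com is a hypothetical domain; any path component comes from your own PingOne custom-domain configuration.

```python
from urllib.parse import urlparse


def check_authorization_server_url(url: str) -> str:
    """Return the whitespace-trimmed URL if it is an absolute https URL; otherwise raise ValueError."""
    trimmed = url.strip()
    parsed = urlparse(trimmed)
    if parsed.scheme != "https" or not parsed.netloc:
        raise ValueError(f"expected an absolute https:// URL, got {url!r}")
    return trimmed
```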

Authenticating to PingOne with OAuth

PingOne supports both OAuth and OAuthClient authentication. In addition to the configuration steps described above, complete the following steps to support OAuth or OAuthClient authentication:

- Create and configure a custom OAuth application, as described in Creating a Custom OAuth Application in the Help documentation.

- To ensure that the driver can access the entities in the Data Model, confirm that you have configured the correct roles for the admin user or worker application you will use, as described in Administrator Roles in the Help documentation.

- Set the appropriate properties for the AuthScheme and authentication flow of your choice, as described in the following subsections.

OAuth (Authorization Code grant)

Set AuthScheme to OAuth.

Desktop Applications

Get and Refresh the OAuth Access Token

After setting the following, you are ready to connect:

- InitiateOAuth: GETANDREFRESH. Use InitiateOAuth to avoid repeating the OAuth exchange and manually setting OAuthAccessToken each time you connect.

- OAuthClientId: The Client ID you obtained when you created your custom OAuth application.

- OAuthClientSecret: The Client Secret you obtained when you created your custom OAuth application.

- CallbackURL: The redirect URI you defined when you registered your custom OAuth application. For example: https://localhost:3333

When you connect, the driver opens PingOne's OAuth endpoint in your default browser. Log in and grant permissions to the application. The driver then completes the OAuth process:

- The driver obtains an access token from PingOne and uses it to request data.

- The OAuth values are saved in the location specified in OAuthSettingsLocation, to be persisted across connections.

The driver refreshes the access token automatically when it expires.
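Collected together, the desktop Authorization Code settings above amount to a handful of connection properties. The sketch below groups them in one place and renders them as Name=Value; pairs. The client ID, client secret, and settings file path are placeholders you would replace with your own values.

```python
from typing import Optional


def oauth_desktop_properties(client_id: str, client_secret: str,
                             callback_url: str = "https://localhost:3333",
                             settings_location: Optional[str] = None) -> dict:
    """Connection properties for the desktop Authorization Code flow described above."""
    props = {
        "AuthScheme": "OAuth",
        "InitiateOAuth": "GETANDREFRESH",  # obtain a token once, then refresh it automatically
        "OAuthClientId": client_id,
        "OAuthClientSecret": client_secret,
        "CallbackURL": callback_url,  # must match the redirect URI registered for the app
    }
    if settings_location:
        props["OAuthSettingsLocation"] = settings_location  # where OAuth values are persisted
    return props


def to_connection_string(props: dict) -> str:
    """Render properties as Name=Value; pairs, the format used in the JDBC URL."""
    return "".join(f"{name}={value};" for name, value in props.items())
```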

For other OAuth methods, including Web Applications, Headless Machines, or Client Credentials Grant, refer to the Help documentation.

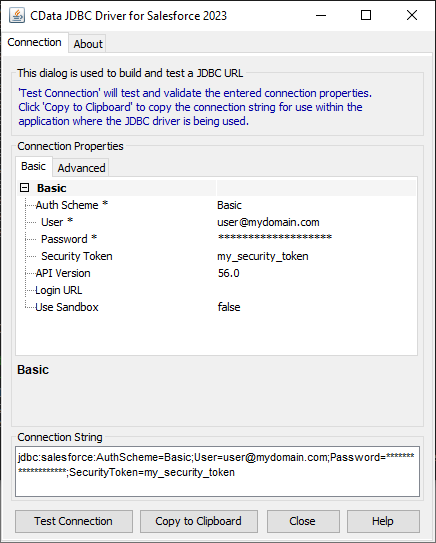

Built-in Connection String Designer

For assistance in constructing the JDBC URL, use the connection string designer built into the PingOne JDBC Driver. Either double-click the JAR file or execute the JAR file from the command line.

java -jar cdata.jdbc.pingone.jar

Fill in the connection properties and copy the connection string to the clipboard.

To host the JDBC driver in Amazon S3, you will need a license (full or trial) and a Runtime Key (RTK). For more information on obtaining this license (or a trial), contact our sales team.

Below is a sample script that uses the CData JDBC driver with the PySpark and awsglue modules to extract PingOne data and write it to an S3 bucket in CSV format. Make any necessary changes to the script to suit your needs and save the job.

import sys
from awsglue.transforms import *
from awsglue.utils import getResolvedOptions
from pyspark.context import SparkContext
from awsglue.context import GlueContext
from awsglue.dynamicframe import DynamicFrame
from awsglue.job import Job

args = getResolvedOptions(sys.argv, ['JOB_NAME'])

sparkContext = SparkContext()
glueContext = GlueContext(sparkContext)
sparkSession = glueContext.spark_session

## Use the CData JDBC driver to read PingOne data from the [CData].[Administrators].Users table into a DataFrame
## Note the populated JDBC URL and driver class name
source_df = (
    sparkSession.read.format("jdbc")
    .option("url", "jdbc:pingone:RTK=5246...;AuthScheme=OAuth;WorkerAppEnvironmentId=eebc33a8-xxxx-4f3a-yyyy-d3e5262fd49e;Region=NA;OAuthClientId=client_id;OAuthClientSecret=client_secret;")
    .option("dbtable", "[CData].[Administrators].Users")
    .option("driver", "cdata.jdbc.pingone.PingOneDriver")
    .load()
)

glueJob = Job(glueContext)
glueJob.init(args['JOB_NAME'], args)

## Convert the DataFrame to an AWS Glue DynamicFrame
dynamic_dframe = DynamicFrame.fromDF(source_df, glueContext, "dynamic_df")

## Write the DynamicFrame in CSV format to a folder in an S3 bucket.
## It is possible to write to any Amazon data store (SQL Server, Redshift, etc.) by using any previously defined connections.
retDatasink4 = glueContext.write_dynamic_frame.from_options(
    frame=dynamic_dframe,
    connection_type="s3",
    connection_options={"path": "s3://mybucket/outfiles"},
    format="csv",
    transformation_ctx="datasink4",
)

glueJob.commit()

Run the Glue Job

With the script written, we are ready to run the Glue job. Click Run Job and wait for the extract/load to complete. You can view the status of the job from the Jobs page in the AWS Glue Console. Once the job has succeeded, your S3 bucket will contain CSV output (possibly split across several part files) with data from the PingOne [CData].[Administrators].Users table.
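The same run can be started and monitored from code. The sketch below uses the Glue StartJobRun and GetJobRun operations and takes the client as a parameter, so in practice you would pass boto3.client("glue"), while a stand-in object works for testing. The 30-second polling interval is an arbitrary choice.

```python
import time

# Job run states after which a run will not change again.
TERMINAL_STATES = {"SUCCEEDED", "FAILED", "STOPPED", "TIMEOUT", "ERROR", "EXPIRED"}


def run_glue_job(glue_client, job_name: str, poll_seconds: float = 30.0, sleep=time.sleep) -> str:
    """Start a run of job_name, poll until it reaches a terminal state, and return that state."""
    run_id = glue_client.start_job_run(JobName=job_name)["JobRunId"]
    while True:
        run = glue_client.get_job_run(JobName=job_name, RunId=run_id)["JobRun"]
        state = run["JobRunState"]
        if state in TERMINAL_STATES:
            return state
        sleep(poll_seconds)  # e.g. STARTING, RUNNING, STOPPING, WAITING: check again later
```

For example, run_glue_job(boto3.client("glue"), "PingOneGlueJob") returns "SUCCEEDED" when the run completes successfully.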

Using the CData JDBC Driver for PingOne in AWS Glue, you can easily create ETL jobs for PingOne data, whether writing the data to an S3 bucket or loading it into any other AWS data store.