Integrate Redshift Data in Pentaho Data Integration

Build ETL pipelines based on Redshift data in the Pentaho Data Integration tool.

The CData JDBC Driver for Amazon Redshift enables access to live data from data pipelines. Pentaho Data Integration is an Extraction, Transformation, and Loading (ETL) engine that extracts data, cleanses it, and stores it in a uniform, accessible format. This article shows how to connect to Redshift data as a JDBC data source and build jobs and transformations based on Redshift data in Pentaho Data Integration.

Configure Connectivity to Redshift

To connect to Redshift, set the following:

- Server: Set this to the host name or IP address of the cluster hosting the database you want to connect to.

- Port: Set this to the port of the cluster.

- Database: Set this to the name of the database. Or, leave this blank to use the default database of the authenticated user.

- User: Set this to the username you want to use to authenticate to the Server.

- Password: Set this to the password you want to use to authenticate to the Server.
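As a rough illustration of how these properties map onto a connection string, the hypothetical Java helper below (`RedshiftUrlBuilder` is not part of the CData driver) joins them into the semicolon-separated `key=value` form used by the typical JDBC URL shown later in this article. It is a minimal sketch that assumes no value contains a semicolon; it does no quoting or escaping.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper, not part of the CData driver: builds a CData-style
// Redshift JDBC URL from the individual connection properties.
final class RedshiftUrlBuilder {

    static String build(String server, int port, String database,
                        String user, String password) {
        // LinkedHashMap keeps the properties in insertion order
        Map<String, String> props = new LinkedHashMap<>();
        props.put("User", user);
        props.put("Password", password);
        // Database is optional; leaving it out means the authenticated
        // user's default database is used
        if (database != null && !database.isBlank()) {
            props.put("Database", database);
        }
        props.put("Server", server);
        props.put("Port", Integer.toString(port));

        // Assumes values contain no ';' (no quoting or escaping is done)
        StringBuilder url = new StringBuilder("jdbc:redshift:");
        for (Map.Entry<String, String> e : props.entrySet()) {
            url.append(e.getKey()).append('=').append(e.getValue()).append(';');
        }
        return url.toString();
    }

    public static void main(String[] args) {
        System.out.println(build("examplecluster.my.us-west-2.redshift.amazonaws.com",
                5439, "dev", "admin", "admin"));
    }
}
```

With the sample values from this article, `build` produces the same string as the typical JDBC URL below; passing an empty database simply omits the `Database=` entry.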

You can obtain the Server and Port values in the AWS Management Console:

- Open the Amazon Redshift console (http://console.aws.amazon.com/redshift).

- On the Clusters page, click the name of the cluster.

- On the Configuration tab for the cluster, copy the cluster URL from the connection strings displayed.

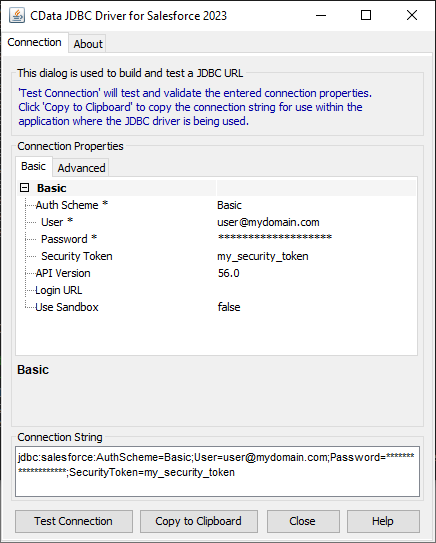
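If you prefer to script this step, the sketch below splits a copied cluster URL into the Server, Port, and Database values. It assumes the console shows the URL in the form `jdbc:redshift://host:port/database`; `ConsoleUrlParser` and `ClusterEndpoint` are hypothetical names, and falling back to port 5439 (Redshift's default) when no port is present is a choice made for illustration.

```java
import java.net.URI;

// Hypothetical helper, not part of the CData driver or AWS tooling.
final class ConsoleUrlParser {

    // Holds the three values the CData connection properties need
    static final class ClusterEndpoint {
        final String server;
        final int port;
        final String database;

        ClusterEndpoint(String server, int port, String database) {
            this.server = server;
            this.port = port;
            this.database = database;
        }

        @Override
        public String toString() {
            return "Server=" + server + ";Port=" + port + ";Database=" + database + ";";
        }
    }

    // Assumes the console URL looks like jdbc:redshift://host:port/database
    static ClusterEndpoint parse(String consoleUrl) {
        String prefix = "jdbc:";
        if (!consoleUrl.startsWith(prefix)) {
            throw new IllegalArgumentException("Not a JDBC URL: " + consoleUrl);
        }
        // Strip "jdbc:" so java.net.URI parses "redshift://host:port/database"
        URI uri = URI.create(consoleUrl.substring(prefix.length()));
        if (uri.getHost() == null) {
            throw new IllegalArgumentException("No host found in: " + consoleUrl);
        }
        // getPort() returns -1 when no port is given; fall back to 5439
        int port = uri.getPort() == -1 ? 5439 : uri.getPort();
        // getPath() is "/dev" for the example; an empty path leaves Database blank
        String path = uri.getPath();
        String database = (path == null || path.length() <= 1) ? "" : path.substring(1);
        return new ClusterEndpoint(uri.getHost(), port, database);
    }

    public static void main(String[] args) {
        System.out.println(parse(
            "jdbc:redshift://examplecluster.my.us-west-2.redshift.amazonaws.com:5439/dev"));
    }
}
```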

Built-in Connection String Designer

For assistance in constructing the JDBC URL, use the connection string designer built into the Redshift JDBC Driver. Either double-click the JAR file or execute it from the command line:

java -jar cdata.jdbc.redshift.jar

Fill in the connection properties and copy the connection string to the clipboard.

When you configure the JDBC URL, you may also want to set the Max Rows connection property. This will limit the number of rows returned, which is especially helpful for improving performance when designing reports and visualizations.
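For example, a design-time URL might carry a small row limit. The hypothetical helper below adds one to an existing connection string, replacing any earlier value. It assumes `MaxRows` is the connection-string name of the Max Rows property, so confirm the exact name in the driver's documentation before relying on it.

```java
// Hypothetical helper; "MaxRows" is assumed to be the connection-string
// name of the driver's Max Rows property.
final class MaxRowsOption {

    private static final String PREFIX = "jdbc:redshift:";

    // Returns a copy of the URL with MaxRows set, replacing any earlier value
    static String withMaxRows(String jdbcUrl, int maxRows) {
        if (!jdbcUrl.startsWith(PREFIX)) {
            throw new IllegalArgumentException("Expected a URL starting with " + PREFIX);
        }
        StringBuilder out = new StringBuilder(PREFIX);
        for (String pair : jdbcUrl.substring(PREFIX.length()).split(";")) {
            if (pair.isBlank()) {
                continue; // ignore empty fragments such as a doubled ';'
            }
            String key = pair.split("=", 2)[0].trim();
            if (key.equalsIgnoreCase("MaxRows")) {
                continue; // drop the old limit so the new one wins
            }
            out.append(pair).append(';');
        }
        return out.append("MaxRows=").append(maxRows).append(';').toString();
    }

    public static void main(String[] args) {
        System.out.println(withMaxRows(
            "jdbc:redshift:User=admin;Password=admin;Database=dev;"
            + "Server=examplecluster.my.us-west-2.redshift.amazonaws.com;Port=5439;", 100));
    }
}
```

Keeping the limit in a separate design-time copy of the URL makes it easy to remove before running the pipeline against the full data set.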

Below is a typical JDBC URL:

jdbc:redshift:User=admin;Password=admin;Database=dev;Server=examplecluster.my.us-west-2.redshift.amazonaws.com;Port=5439;

Save your connection string for use in Pentaho Data Integration.
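Before pasting the string into Pentaho, a quick check can catch a missing property. The sketch below, a hypothetical `ConnectionStringCheck` class, splits the URL into properties and reports which of Server, Port, User, and Password are absent (Database is left out because it is optional). Treating property names case-insensitively is an assumption made to be lenient, not a documented driver rule.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sanity check for a CData-style Redshift connection string.
final class ConnectionStringCheck {

    private static final String PREFIX = "jdbc:redshift:";
    private static final List<String> REQUIRED = List.of("Server", "Port", "User", "Password");

    // Splits "jdbc:redshift:Key=Value;Key=Value;" into a map
    static Map<String, String> parse(String jdbcUrl) {
        if (!jdbcUrl.startsWith(PREFIX)) {
            throw new IllegalArgumentException("Expected a URL starting with " + PREFIX);
        }
        // Case-insensitive keys, so "server=" and "Server=" are treated alike
        Map<String, String> props = new TreeMap<>(String.CASE_INSENSITIVE_ORDER);
        for (String pair : jdbcUrl.substring(PREFIX.length()).split(";")) {
            int eq = pair.indexOf('=');
            if (eq <= 0) {
                continue; // skip empty or malformed fragments
            }
            props.put(pair.substring(0, eq).trim(), pair.substring(eq + 1).trim());
        }
        return props;
    }

    // Returns the required properties that are absent or empty
    static List<String> missing(String jdbcUrl) {
        Map<String, String> props = parse(jdbcUrl);
        List<String> absent = new ArrayList<>();
        for (String key : REQUIRED) {
            String value = props.get(key);
            if (value == null || value.isEmpty()) {
                absent.add(key);
            }
        }
        return absent;
    }

    public static void main(String[] args) {
        String url = "jdbc:redshift:User=admin;Password=admin;Database=dev;"
            + "Server=examplecluster.my.us-west-2.redshift.amazonaws.com;Port=5439;";
        List<String> absent = missing(url);
        System.out.println(absent.isEmpty() ? "All required properties set" : "Missing: " + absent);
    }
}
```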

Connect to Redshift from Pentaho DI

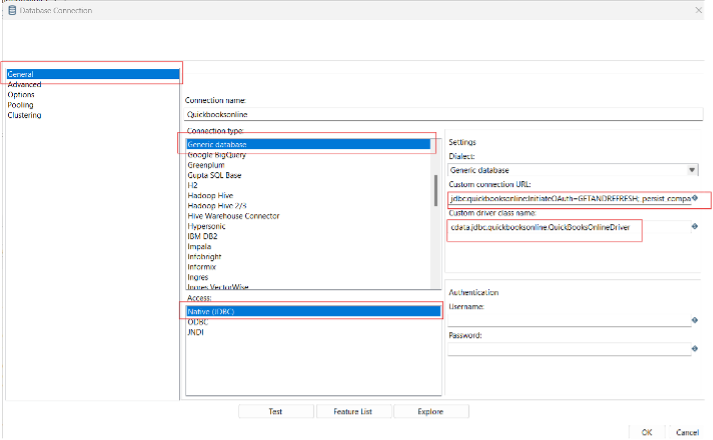

Open Pentaho Data Integration and select "Database Connection" to configure a connection to the CData JDBC Driver for Amazon Redshift:

- Click "General"

- Set Connection name (e.g. Redshift Connection)

- Set Connection type to "Generic database"

- Set Access to "Native (JDBC)"

- Set Custom connection URL to your Redshift connection string (e.g. jdbc:redshift:User=admin;Password=admin;Database=dev;Server=examplecluster.my.us-west-2.redshift.amazonaws.com;Port=5439;)

- Set Custom driver class name to "cdata.jdbc.redshift.RedshiftDriver"

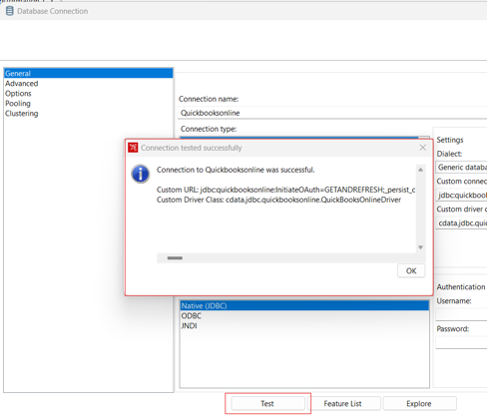

- Test the connection and click "OK" to save.
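In plain JDBC terms, the Custom driver class name and Custom connection URL settings amount to loading a driver class and opening a connection with that URL. The sketch below shows that sequence. `ConnectionTest` is a hypothetical name, it assumes the CData JAR is on the classpath, and it only approximates what a connection test checks; it is not Pentaho's own code.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.SQLException;

// Sketch only: approximates a connection test for a "Generic database" connection.
final class ConnectionTest {

    static String test(String driverClass, String jdbcUrl) {
        try {
            // Loading the class lets the driver register itself with DriverManager;
            // the CData JAR must be on the classpath for this to succeed
            Class.forName(driverClass);
        } catch (ClassNotFoundException e) {
            return "Driver class not found: " + driverClass;
        }
        // try-with-resources closes the connection as soon as it has been opened
        try (Connection conn = DriverManager.getConnection(jdbcUrl)) {
            return "OK";
        } catch (SQLException e) {
            return "Connection failed: " + e.getMessage();
        }
    }

    public static void main(String[] args) {
        System.out.println(test("cdata.jdbc.redshift.RedshiftDriver",
            "jdbc:redshift:User=admin;Password=admin;Database=dev;"
            + "Server=examplecluster.my.us-west-2.redshift.amazonaws.com;Port=5439;"));
    }
}
```

Reporting a missing driver class separately from a failed connection helps tell a classpath problem apart from a wrong Server, Port, or credential.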

Create a Data Pipeline for Redshift

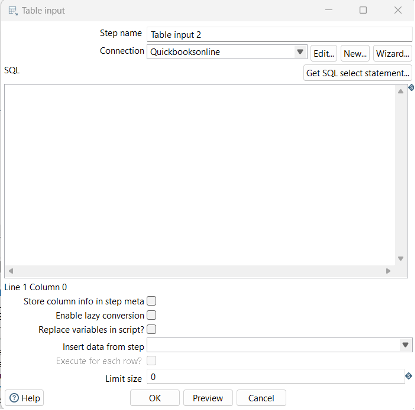

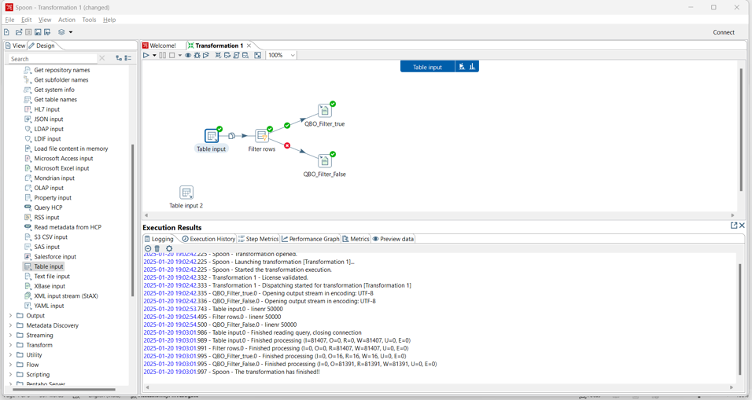

Once the connection to Redshift is configured using the CData JDBC Driver, you are ready to create a new transformation or job.

- Click "File" >> "New" >> "Transformation" (or "Job")

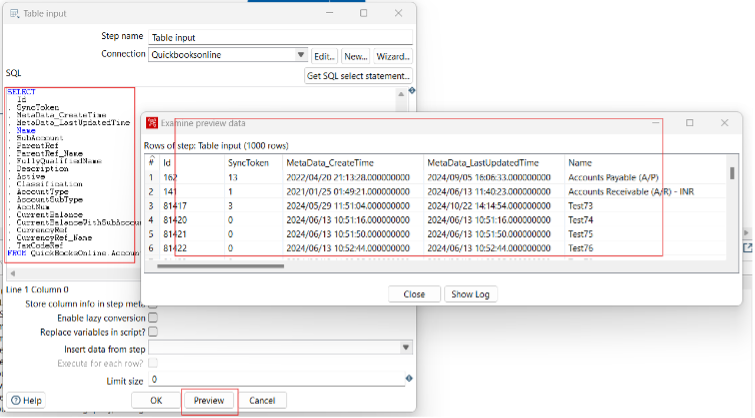

- Drag a "Table input" object into the workflow panel and select your Redshift connection.

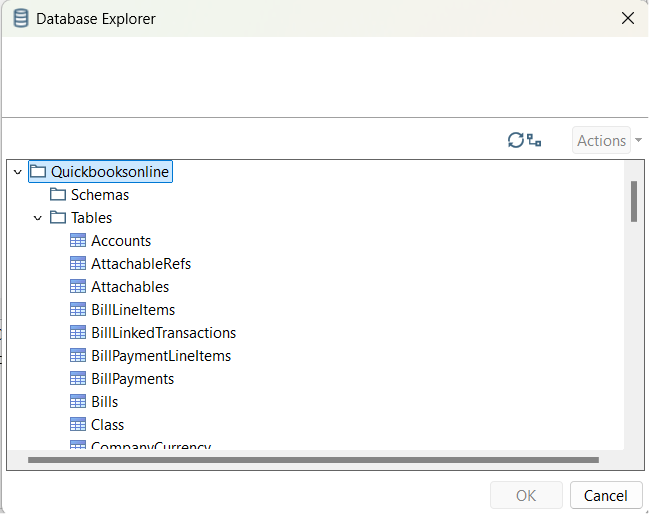

- Click "Get SQL select statement" and use the Database Explorer to view the available tables and views.

- Select a table and optionally preview the data for verification.
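To see roughly what browsing tables and generating a select statement involve, the sketch below lists tables and views through standard JDBC metadata and builds a plain `SELECT` for a chosen table. `TableBrowser` and `buildSelect` are hypothetical, the generated SQL is an assumption rather than Pentaho's exact output, and identifiers are restricted to simple names so the example avoids dialect-specific quoting.

```java
import java.sql.Connection;
import java.sql.DatabaseMetaData;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

// Hypothetical helpers illustrating table discovery and SELECT generation.
final class TableBrowser {

    // Simple identifiers only: letters, digits, underscores, not starting with a digit
    private static final Pattern SIMPLE_NAME = Pattern.compile("[A-Za-z_][A-Za-z0-9_]*");

    // Lists "schema.table" names for tables and views via standard JDBC metadata
    static List<String> listTables(Connection conn) throws SQLException {
        List<String> names = new ArrayList<>();
        DatabaseMetaData meta = conn.getMetaData();
        try (ResultSet rs = meta.getTables(null, null, "%", new String[] {"TABLE", "VIEW"})) {
            while (rs.next()) {
                String schema = rs.getString("TABLE_SCHEM");
                String table = rs.getString("TABLE_NAME");
                names.add(schema == null ? table : schema + "." + table);
            }
        }
        return names;
    }

    // Builds "SELECT col1, col2 FROM schema.table", or SELECT * when no columns are given
    static String buildSelect(String schema, String table, List<String> columns) {
        requireSimple(table);
        if (schema != null) {
            requireSimple(schema);
        }
        columns.forEach(TableBrowser::requireSimple);
        String select = columns.isEmpty() ? "*" : String.join(", ", columns);
        String from = schema == null ? table : schema + "." + table;
        return "SELECT " + select + " FROM " + from;
    }

    // Rejects names that would need quoting, since quoting rules vary by dialect
    private static void requireSimple(String name) {
        if (!SIMPLE_NAME.matcher(name).matches()) {
            throw new IllegalArgumentException("Identifier needs quoting: " + name);
        }
    }
}
```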

At this point, you can continue your transformation or job by selecting a suitable destination and adding any transformations to modify, filter, or otherwise alter the data during replication.

Free Trial & More Information

Download a free, 30-day trial of the CData JDBC Driver for Amazon Redshift and start working with your live Redshift data in Pentaho Data Integration today.