How to work with SharePoint Data in Apache Spark using SQL

Access and process SharePoint Data in Apache Spark using the CData JDBC Driver.

Apache Spark is a fast and general engine for large-scale data processing. When paired with the CData JDBC Driver for SharePoint, Spark can work with live SharePoint data. This article describes how to connect to and query SharePoint data from a Spark shell.

The CData JDBC Driver offers unmatched performance for interacting with live SharePoint data due to optimized data processing built into the driver. When you issue complex SQL queries to SharePoint, the driver pushes supported SQL operations, like filters and aggregations, directly to SharePoint and utilizes the embedded SQL engine to process unsupported operations (often SQL functions and JOIN operations) client-side. With built-in dynamic metadata querying, you can work with and analyze SharePoint data using native data types.

About SharePoint Data Integration

Accessing and integrating live data from SharePoint has never been easier with CData. Customers rely on CData connectivity to:

- Access data from a wide range of SharePoint versions, including Windows SharePoint Services 3.0, Microsoft Office SharePoint Server 2007 and above, and SharePoint Online.

- Access all of SharePoint thanks to support for Hidden and Lookup columns.

- Recursively scan folders to create a relational model of all SharePoint data.

- Use SQL stored procedures to upload and download documents and attachments.

Most customers rely on CData solutions to integrate SharePoint data into their database or data warehouse, while others integrate their SharePoint data with preferred data tools, like Power BI, Tableau, or Excel.

For more information on how customers are solving problems with CData's SharePoint solutions, refer to our blog: Drivers in Focus: Collaboration Tools.

Getting Started

Install the CData JDBC Driver for SharePoint

Download the CData JDBC Driver for SharePoint installer, unzip the package, and run the JAR file to install the driver.

Start a Spark Shell and Connect to SharePoint Data

- Open a terminal and start the Spark shell with the CData JDBC Driver for SharePoint JAR file as the jars parameter:

$ spark-shell --jars "/CData/CData JDBC Driver for SharePoint/lib/cdata.jdbc.sharepoint.jar"

- With the shell running, you can connect to SharePoint with a JDBC URL and use the SQL Context load() function to read a table.

Set the URL property to the base SharePoint site or to a sub-site. This allows you to query any lists and other SharePoint entities defined for the site or sub-site.

The User and Password properties, under the Authentication section, must be set to valid SharePoint user credentials when using SharePoint On-Premise.

If you are connecting to SharePoint Online, set SharePointEdition to SHAREPOINTONLINE in addition to the User and Password connection string properties. For more details on connecting to SharePoint Online, see the "Getting Started" chapter of the help documentation.

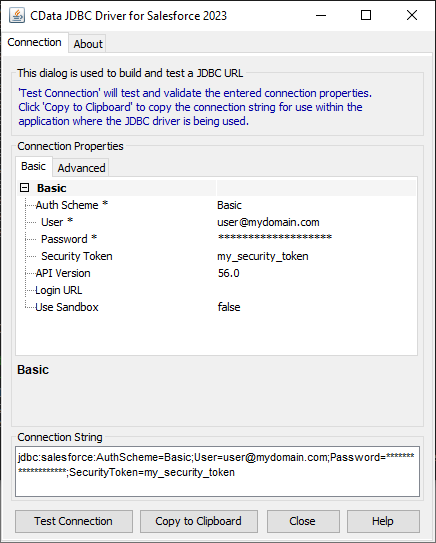
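The connection properties described above end up as a single semicolon-delimited JDBC URL. A minimal sketch of how such a URL could be assembled in Scala follows. `buildJdbcUrl` is a hypothetical helper rather than part of the driver, and the host names, account, and password are placeholders to replace with your own values:

```scala
// Hypothetical helper: joins key/value connection properties into a
// JDBC URL of the form jdbc:sharepoint:Key=Value;Key=Value;
// It does not escape values, so it assumes no value contains a semicolon.
def buildJdbcUrl(props: Seq[(String, String)]): String =
  props.map { case (key, value) => s"$key=$value" }
    .mkString("jdbc:sharepoint:", ";", ";")

// On-premises site: User, Password, and URL, as described above.
val onPremUrl = buildJdbcUrl(Seq(
  "User" -> "myuseraccount",
  "Password" -> "mypassword",
  "Auth Scheme" -> "NTLM",
  "URL" -> "http://sharepointserver/mysite",
  "SharePointEdition" -> "SharePointOnPremise"
))

// SharePoint Online: same credentials plus the edition property.
// The site URL below is a placeholder, not a real tenant.
val onlineUrl = buildJdbcUrl(Seq(
  "User" -> "myuseraccount",
  "Password" -> "mypassword",
  "URL" -> "https://example.sharepoint.com/sites/mysite",
  "SharePointEdition" -> "SHAREPOINTONLINE"
))

println(onPremUrl)
```

Either value can then be passed as the url option when reading a table in the Spark shell. In practice you would likely load the password from an environment variable or a secrets store rather than typing it into the shell.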

Built-in Connection String Designer

For assistance in constructing the JDBC URL, use the connection string designer built into the SharePoint JDBC Driver. Either double-click the JAR file or run it from the command line:

java -jar cdata.jdbc.sharepoint.jar

Fill in the connection properties and copy the connection string to the clipboard.

![Using the built-in connection string designer to generate a JDBC URL (Salesforce is shown.)]()

Configure the connection to SharePoint, using the connection string generated above.

scala> val sharepoint_df = spark.sqlContext.read.format("jdbc").option("url", "jdbc:sharepoint:User=myuseraccount;Password=mypassword;Auth Scheme=NTLM;URL=http://sharepointserver/mysite;SharePointEdition=SharePointOnPremise;").option("dbtable","MyCustomList").option("driver","cdata.jdbc.sharepoint.SharePointDriver").load()

- Once you connect and the data is loaded, you will see the table schema displayed.

Register the SharePoint data as a temporary table (called a temporary view in Spark 2.0 and later):

scala> sharepoint_df.createOrReplaceTempView("mycustomlist")

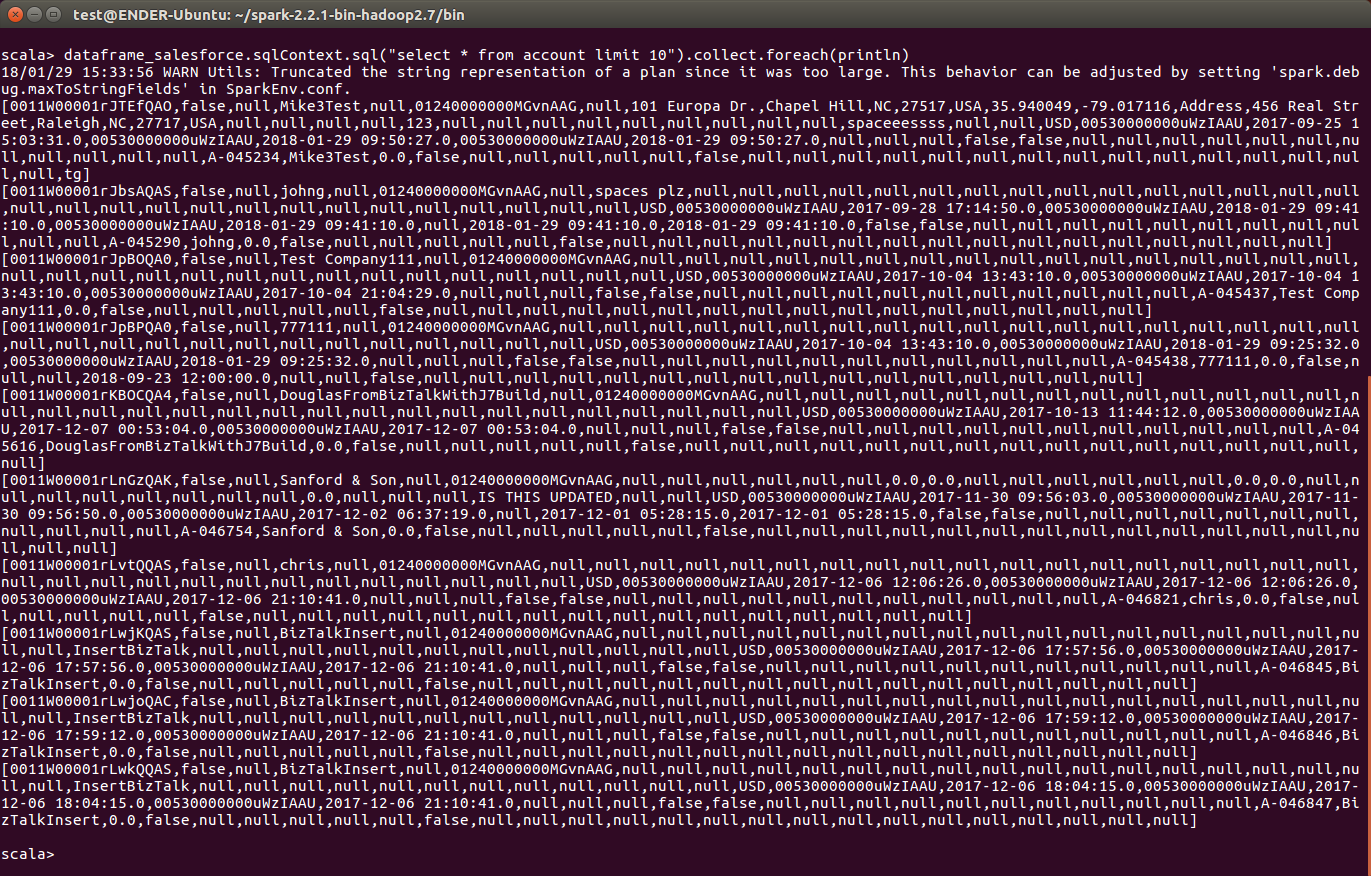

Perform custom SQL queries against the data using commands like the one below. Note that string values such as the location must be enclosed in single quotes:

scala> sharepoint_df.sqlContext.sql("SELECT Name, Revenue FROM MyCustomList WHERE Location = 'Chapel Hill'").collect.foreach(println)

You will see the results displayed in the console, similar to the following:

![Data in Apache Spark (Salesforce is shown)]()
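The same filter can also be expressed with Spark's DataFrame API, which avoids embedding quoted string values inside a SQL string. This is a minimal sketch, assuming your list has the Name, Revenue, and Location columns used in the example query. `revenueFor` is a hypothetical helper name, not part of Spark or the CData driver:

```scala
import org.apache.spark.sql.DataFrame
import org.apache.spark.sql.functions.col

// Hypothetical helper: keep the rows for one location and return only
// the Name and Revenue columns. The column names come from the example
// query in this article and may differ in your own list.
def revenueFor(df: DataFrame, location: String): DataFrame =
  df.filter(col("Location") === location).select("Name", "Revenue")
```

In the Spark shell, calling revenueFor(sharepoint_df, "Chapel Hill").show() prints the matching rows in tabular form.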

Using the CData JDBC Driver for SharePoint in Apache Spark, you can perform fast and complex analytics on SharePoint data, combining the power and utility of Spark with your data. Download a free, 30-day trial of any of the 200+ CData JDBC Drivers and get started today.